LoRAs Galore: Create a LoRA Playground with Modal, Gradio, and S3

This example shows how to mount an S3 bucket in a Modal app using CloudBucketMount.

We will download a bunch of LoRA adapters from the Hugging Face Hub into our S3 bucket, then read from that bucket, on the fly, when doing inference.

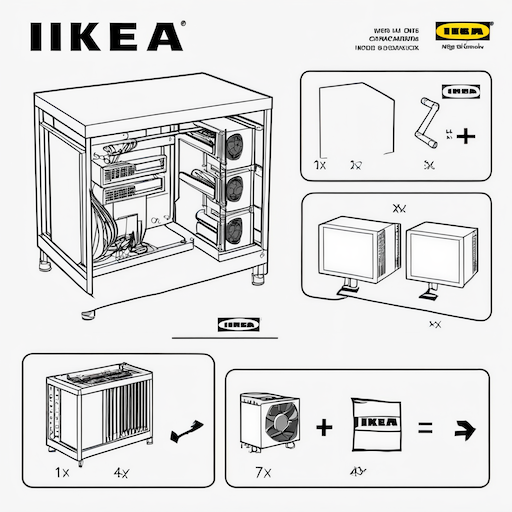

By default, we use the IKEA instructions LoRA as an example, which produces the following image when prompted to generate “IKEA instructions for building a GPU rig for deep learning”:

By the end of this example, we’ll have deployed a “playground” app where anyone with a browser can try out these custom models. That’s the power of Modal: custom, autoscaling AI applications, deployed in seconds. You can try out our deployment here.

Basic setup

You will need to have an S3 bucket and AWS credentials to run this example. Refer to the documentation for the detailed IAM permissions those credentials will need.

After you are done creating a bucket and configuring IAM settings, create a Modal Secret: navigate to the “Secrets” tab, click on the AWS card, then fill in the fields with the AWS key and secret you created previously. Name the Secret s3-bucket-secret.

Modal runs serverless functions inside containers. The environments those functions run in are defined by the container Image. We construct an image with the dependencies we need, so there is no need to install them locally.

We attach the S3 bucket to all the Modal functions in this app by mounting it on the filesystem they see, passing a CloudBucketMount to the volumes dictionary argument. We can read and write to this mounted bucket (almost) as if it were a local directory.
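To make the “as if it were a local directory” point concrete, here is a small stdlib-only sketch of the correspondence between object keys in the bucket and paths inside the container. It assumes the bucket is mounted at a hypothetical MOUNT_PATH of /mnt/bucket with no key prefix; key_to_path and path_to_key are illustrative helpers, not part of Modal’s API.

```python
from pathlib import PurePosixPath

# Hypothetical mount point: in the app, this would be the key you pair with
# the CloudBucketMount in the volumes dictionary.
MOUNT_PATH = PurePosixPath("/mnt/bucket")


def key_to_path(key: str) -> PurePosixPath:
    """Map an S3 object key (e.g. 'loras/a/b.safetensors') to its path in the mount."""
    # Strip a leading slash so the key is joined under the mount point
    # instead of replacing it with an absolute path.
    return MOUNT_PATH / key.lstrip("/")


def path_to_key(path: PurePosixPath) -> str:
    """Map a path under the mount back to the S3 object key it corresponds to."""
    return path.relative_to(MOUNT_PATH).as_posix()


if __name__ == "__main__":
    p = key_to_path("loras/some-user/some-lora/model.safetensors")
    print(p)
    print(path_to_key(p))
```

Writing a file at key_to_path(k) inside a container is what creates the object k in the bucket, and listing a directory under the mount lists the keys that share that prefix.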

For the base model, we’ll use a modal.Volume to store the Hugging Face cache.

Acquiring LoRA weights

search_loras() will use the Hub API to search for LoRAs. We limit LoRAs to a maximum size to avoid downloading very large model weights. We went with 800 MiB, but feel free to adapt to what works best for you.
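The size cap inside search_loras() can be sketched independently of the Hub API. In this stdlib-only version, a plain list stands in for the Hub search results; the name select_loras and its (repo_id, file sizes in bytes) input shape are hypothetical. In the real function, the sizes would come from the Hub’s file metadata for each repository.

```python
from typing import Iterable

# 800 MiB, the cap mentioned in the text (1 MiB = 1024 * 1024 bytes).
MAX_LORA_BYTES = 800 * 1024 * 1024


def select_loras(
    candidates: Iterable[tuple[str, list[int]]],
    limit: int,
    max_bytes: int = MAX_LORA_BYTES,
) -> list[str]:
    """Keep up to `limit` repository ids whose files total at most `max_bytes`.

    `candidates` yields (repo_id, file_sizes_in_bytes) pairs, assumed to be
    sorted by preference already (e.g. most downloaded first).
    """
    selected: list[str] = []
    for repo_id, file_sizes in candidates:
        if sum(file_sizes) > max_bytes:
            continue  # too large: skip it rather than download it
        selected.append(repo_id)
        if len(selected) >= limit:
            break  # stop early once we have enough LoRAs
    return selected


if __name__ == "__main__":
    candidates = [
        ("a/small", [10_000_000]),
        ("b/huge", [900 * 1024 * 1024]),
        ("c/medium", [400 * 1024 * 1024, 300 * 1024 * 1024]),
    ]
    print(select_loras(candidates, limit=5))
```

Because the size is summed over all files in a repository, a LoRA made of several moderately sized files can still be rejected; "c/medium" above passes only because its 700 MiB total stays under the cap.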

We want to take the LoRA weights we found and move them from Hugging Face onto S3, where they’ll be accessible, at low latency and high throughput, to our Modal functions.

Because the bucket is mounted on the filesystem, downloading files into this mount automatically uploads them to S3. To speed things up, we run this download function in parallel using Modal’s map.
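Here is a local, stdlib-only sketch of that fan-out. It swaps Modal’s map for concurrent.futures.ThreadPoolExecutor.map so it can run anywhere, writes placeholder bytes instead of fetching real weights from the Hub, and uses a temporary directory in place of the mounted bucket. download_lora and download_all are hypothetical helpers, not the example’s actual code.

```python
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import Optional


def download_lora(repo_id: str, loras_root: Path) -> Optional[str]:
    """Hypothetical stand-in for the real download.

    Writes a weights file under loras_root/<repo_id>/ unless one is already
    there. Returns repo_id on success and None on failure, so failures can be
    filtered out by the caller.
    """
    target = loras_root / repo_id  # ids like "user/name" become nested dirs
    if target.is_dir() and any(target.rglob("*.safetensors")):
        return repo_id  # already stored: skip the download
    try:
        target.mkdir(parents=True, exist_ok=True)
        # Placeholder bytes; the real function would fetch from the Hub here.
        # On a CloudBucketMount, this write is what uploads the file to S3.
        (target / "adapter.safetensors").write_bytes(b"placeholder")
    except OSError:
        return None
    return repo_id


def download_all(repo_ids: list[str], loras_root: Path) -> list[str]:
    # Local analogy to calling .map on a Modal function: fan out one call per
    # repository id and collect the results in input order.
    with ThreadPoolExecutor(max_workers=8) as pool:
        results = pool.map(lambda r: download_lora(r, loras_root), repo_ids)
        return [r for r in results if r is not None]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp) / "loras"
        print(download_all(["alice/ikea-style", "bob/pixel-art"], root))
```

With Modal, each call would run in its own container rather than a thread, but the shape is the same: map over repository ids, get the results back in order, and drop the failures. Skipping repositories that are already present also makes reruns cheap.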

Inference with LoRAs

We define a StableDiffusionLoRA class to organize our inference code. We load Stable Diffusion XL 1.0 as a base model, then, when doing inference, we load whichever LoRA the user specifies from the S3 bucket.
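Before the pipeline can apply a LoRA, the inference code has to turn the user’s choice into a weights file in the bucket. A minimal sketch, assuming each LoRA sits in its own directory named after its Hub repository id under a hypothetical loras_root inside the mount; find_lora_weights is an illustrative helper. Inside the container, the returned path would then be handed to the diffusers pipeline’s LoRA loading method (load_lora_weights in recent diffusers releases), which needs a GPU and the base model and is left out here.

```python
import tempfile
from pathlib import Path


def find_lora_weights(loras_root: Path, repo_id: str) -> Path:
    """Locate a .safetensors file for `repo_id` under the mounted LoRA directory.

    Hypothetical helper: assumes each LoRA was stored at loras_root/<repo_id>/.
    """
    repo_dir = loras_root / repo_id
    matches = sorted(repo_dir.rglob("*.safetensors")) if repo_dir.is_dir() else []
    if not matches:
        raise FileNotFoundError(f"no .safetensors weights for {repo_id!r} in {repo_dir}")
    # Take the first match in sorted order so the choice is deterministic.
    return matches[0]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        lora_dir = root / "alice" / "ikea-style"
        lora_dir.mkdir(parents=True)
        (lora_dir / "adapter.safetensors").write_bytes(b"")
        print(find_lora_weights(root, "alice/ikea-style").name)
```

Raising FileNotFoundError for an unknown LoRA lets the caller report a clear error to the user instead of silently falling back to the base model.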

For more on the decorators we use on this class’s methods to speed up building and booting, check out the container lifecycle hooks guide.

Try it locally!

To use our inference code from our local command line, we add a local_entrypoint to our app.

Run it using modal run cloud_bucket_mount_loras.py, and pass --help to see the available options.

The inference code will run on our machines, but the results will be available on yours.

LoRA Exploradora: A hosted Gradio interface

Command line tools are cool, but we can do better! With the Gradio library by Hugging Face, we can create a simple web interface around our Python inference function, then use Modal to host it for anyone to try out.

To set up your own, run modal deploy cloud_bucket_mount_loras.py and navigate to the URL it prints out.

If you’re playing with the code, use modal serve instead to see changes live.