Build your own data warehouse with DuckDB, DBT, and Modal

This example contains a minimal but capable data warehouse. It’s comprised of the following:

Meet your new serverless cloud data warehouse, powered by Modal!

Configure Modal, S3, and DBT

The only thing in the source code that you must update is the S3 bucket name. AWS S3 bucket names are globally unique, and the one in this source is used by us to host this example.

Update the BUCKET_NAME variable below and also any references to the original value

within sample_proj_duckdb_s3/models/. The AWS IAM policy below also includes the bucket

name and that must be updated.

Most of the DBT code and configuration is taken directly from the classic Jaffle Shop demo and modified to support

using dbt-duckdb with an S3 bucket.

The DBT profiles.yml configuration is taken from the dbt-duckdb docs.

We also define the environment our application will run in — a container image, as in Docker. See this guide for details.

We’ll also need to authenticate with AWS to store data in S3.

Create this Secret using the “AWS” template from the Secrets dashboard.

Below we will use the provided credentials in a Modal Function to create an S3 bucket and

populate it with .parquet data, so be sure to provide credentials for a user

with permission to create S3 buckets and read & write data from them.

The policy required for this example is the following. Not that you must update the bucket name listed in the policy to your own bucket name.

Upload seed data

In order to provide source data for DBT to ingest and transform,

we have the below create_source_data function which creates an AWS S3 bucket and

populates it with Parquet files based off the CSV data in the seeds/ directory.

You can kick it off by running this script on Modal:

This script also runs the full data warehouse setup, and the whole process takes a minute or two.

We’ll walk through the rest of the steps below. See the app.local_entrypoint below for details.

Note that this is not the typical way that seeds/ data is used, but it’s useful for this

demonstration. See the DBT docs for more info.

Run DBT on the cloud with Modal

Modal makes it easy to run Python code in the cloud.

And DBT is a Python tool, so it’s easy to run DBT with Modal:

below, we import the dbt library’s dbtRunner to pass commands from our

Python code, running on Modal, the same way we’d pass commands on a command line.

Note that this Modal Function has access to our AWS S3 Secret, the local files associated with our DBT project and profiles, and a remote Modal Volume that acts as a distributed file system.

You can run this Modal Function from the command line with

modal run dbt_duckdb.py::run --command run

A successful run will log something like the following:

Look for the 'materialized='external' DBT config in the SQL templates

to see how dbt-duckdb is able to write back the transformed data to AWS S3!

After running the run command and seeing it succeed, check what’s contained

under the bucket’s out/ key prefix. You’ll see that DBT has run the transformations

defined in sample_proj_duckdb_s3/models/ and produced output .parquet files.

Serve fresh data documentation with FastAPI and Modal

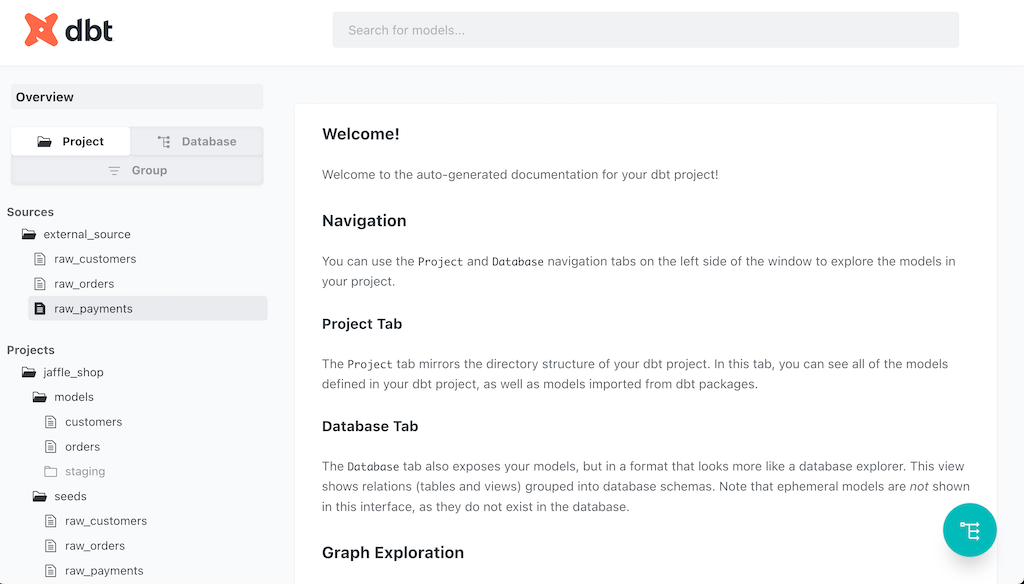

DBT also automatically generates rich, interactive data docs. You can serve these docs on Modal. Just define a simple FastAPI app:

And deploy that app to Modal with

If you navigate to the output URL, you should see something like

You can also check out our instance of the docs here. The app will be served “serverlessly” — it will automatically scale up or down during periods of increased or decreased usage, and you won’t be charged at all when it has scaled to zero.

Schedule daily updates

The following daily_build function runs on a schedule to keep the DuckDB data warehouse up-to-date. It is also deployed by the same modal deploy command for the docs app.

The source data for this warehouse is static, so the daily executions don’t really “update” anything, just re-build. But this example could be extended to have sources which continually provide new data across time. It will also generate the DBT docs daily to keep them fresh.