TensorFlow tutorial

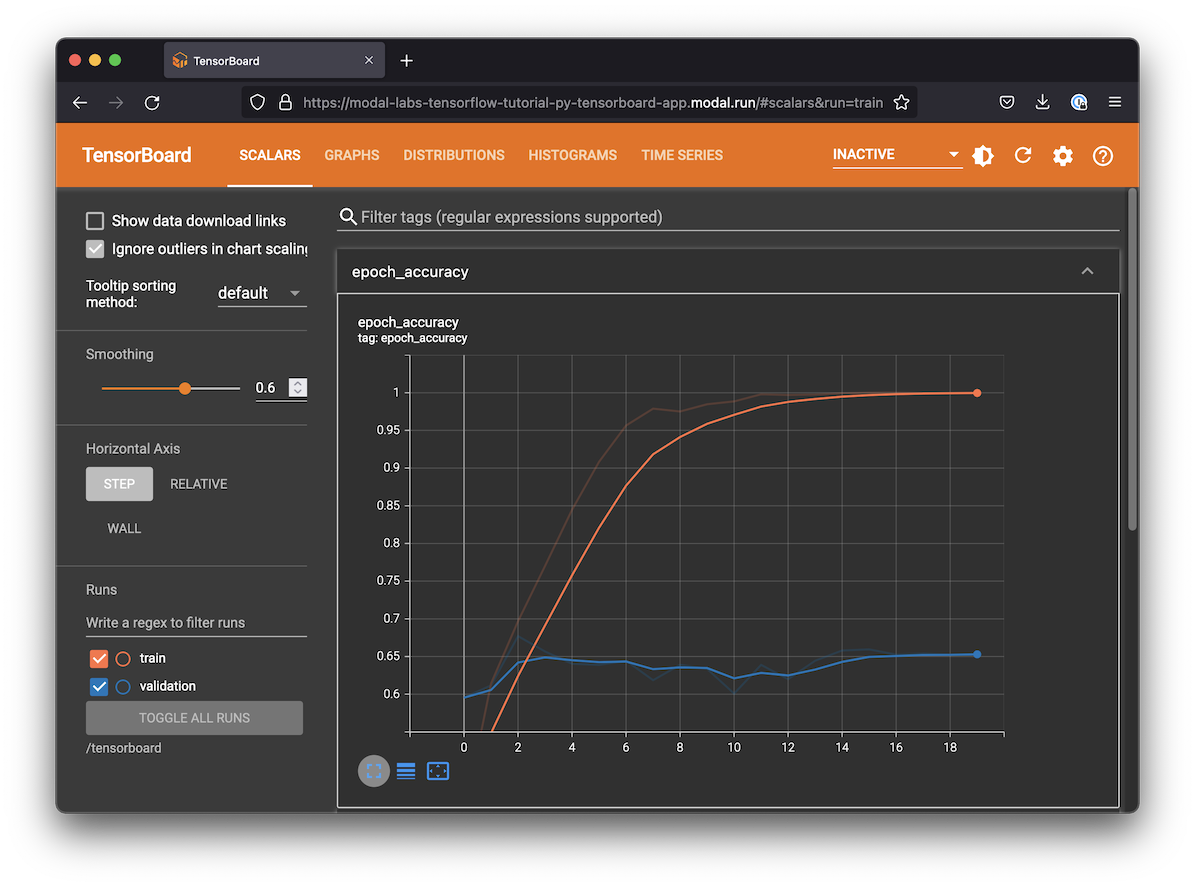

This is essentially a version of the image classification example from the TensorFlow documentation, running inside Modal on a GPU. Running this script also creates a TensorBoard URL where you can watch the model train and review the results.

Setting up the dependencies

Configuring a system to properly run GPU-accelerated TensorFlow can be challenging.

Luckily, Modal makes it easy to stand on the shoulders of giants and use a pre-built Docker container image from a registry like Docker Hub.

We recommend TensorFlow’s official base Docker container images, which come with tensorflow and its matching CUDA libraries already installed.

If you want to install TensorFlow some other way, check out their docs for options and instructions. GPU-enabled containers on Modal will always have NVIDIA drivers available, but you will need to add higher-level tools like CUDA and cuDNN yourself. See the Modal guide on customizing environments for options we support.
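Because the driver and the CUDA libraries come from different places, it can help to check which layers are actually present in a container. The following is a rough standard-library sketch. It treats `nvidia-smi` on the `PATH` as a proxy for the driver and looks up the library names `cudart` and `cudnn`. These are heuristics and assumptions, not an official Modal or NVIDIA check, and `check_gpu_stack` is a hypothetical helper.

```python
import ctypes.util
import shutil


def check_gpu_stack() -> dict[str, bool]:
    """Rough report of which GPU software layers are visible here.

    Heuristics (assumptions, not an official check):
    - nvidia-smi on PATH suggests the NVIDIA driver is installed.
    - libcudart / libcudnn being findable suggests CUDA and cuDNN are present.
    """
    return {
        "nvidia_driver": shutil.which("nvidia-smi") is not None,
        "cuda_runtime": ctypes.util.find_library("cudart") is not None,
        "cudnn": ctypes.util.find_library("cudnn") is not None,
    }


if __name__ == "__main__":
    for layer, found in check_gpu_stack().items():
        print(f"{layer}: {'found' if found else 'missing'}")
```

On a GPU container built without CUDA, you would expect the driver to show up while the other two are missing. Inside the training function itself, TensorFlow's own `tf.config.list_physical_devices("GPU")` is the more direct test of whether TensorFlow can see the GPU.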

Logging data to TensorBoard

Training ML models takes time. Just as we need to monitor long-running systems like databases or web servers for issues, we also need to monitor the training process of our ML models. TensorBoard is a tool that comes with TensorFlow that helps you visualize the state of your ML model training. It is packaged as a web server.

We want to run the web server for TensorBoard at the same time as we are training the TensorFlow model. The easiest way to share data between the training function and the web server is by creating a Modal Volume that we can attach to both Functions.
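Stripped of Modal, the sharing pattern is a writer and a reader that agree on a directory. Below is a minimal sketch under two assumptions: a plain local directory stands in for the Volume's mount point, and a hypothetical `metrics.jsonl` file stands in for TensorBoard's event files, which TensorFlow writes in the real example. `log_epoch` and `read_history` are illustrative names. With an actual Modal Volume, the reader may also need to refresh its view of the Volume to see new files. That refresh is what the middleware described later in this tutorial handles.

```python
import json
import tempfile
from pathlib import Path


def log_epoch(log_dir: Path, epoch: int, metrics: dict[str, float]) -> None:
    """Writer side: append one JSON record per epoch to a shared log file."""
    log_dir.mkdir(parents=True, exist_ok=True)
    with open(log_dir / "metrics.jsonl", "a", encoding="utf-8") as f:
        f.write(json.dumps({"epoch": epoch, **metrics}) + "\n")


def read_history(log_dir: Path) -> list[dict]:
    """Reader side: load every record written so far (empty if none yet)."""
    path = log_dir / "metrics.jsonl"
    if not path.exists():
        return []
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        shared = Path(tmp) / "logs"  # stand-in for the Volume mount point
        for epoch, loss in enumerate([0.9, 0.6, 0.4]):
            log_epoch(shared, epoch, {"loss": loss})
        print(read_history(shared))
```

Neither side knows about the other. They only share a path, which is why attaching the same Volume to both Functions is enough.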

Training function

This is basically the same code as the official example from the TensorFlow docs. A few Modal-specific things are worth pointing out:

- We attach the Volume for sharing data with TensorBoard in the `app.function` decorator.
- We also annotate this function with `gpu="T4"` to make sure it runs on a GPU.
- We put all the TensorFlow imports inside the function body. This makes it possible to run this example even if you don’t have TensorFlow installed on your local computer, which is a key benefit of Modal!
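The third point is worth seeing in isolation. `modal run` imports your script locally before anything runs remotely, so a top-level `import tensorflow` would fail on a machine without TensorFlow. In this sketch, the hypothetical `train` function imports NumPy and TensorFlow only inside its body. Its Modal decorator is omitted so the code runs without Modal, and the tiny regression model is illustrative rather than the tutorial's image classifier.

```python
import sys


def train(epochs: int = 5) -> float:
    """Hypothetical training function with lazily imported dependencies.

    In the tutorial, this would also carry @app.function(gpu="T4", volumes=...).
    """
    # Resolved only when train() runs, e.g. in a container with TensorFlow.
    import numpy as np
    import tensorflow as tf

    x = np.random.rand(256, 4).astype("float32")
    y = x.sum(axis=1, keepdims=True)
    model = tf.keras.Sequential([tf.keras.Input(shape=(4,)), tf.keras.layers.Dense(1)])
    model.compile(optimizer="adam", loss="mse")
    history = model.fit(x, y, epochs=epochs, verbose=0)
    return history.history["loss"][-1]


if __name__ == "__main__":
    # Defining train() did not import TensorFlow.
    print("tensorflow" in sys.modules)  # False
```

The trade-off is that an import error surfaces only when the function runs, not when the script loads. On Modal that is exactly what you want, because the function runs in an image where TensorFlow is installed.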

You may notice some warnings in the logs about certain CPU performance optimizations (NUMA awareness and AVX/SSE instruction set support) not being available. While these optimizations can be important for some workloads, especially if you are running ML models on a CPU, they are not critical for most cases.

Running TensorBoard

TensorBoard is compatible with a Python web server standard called WSGI, the same standard used by Flask. Modal speaks WSGI too, so it’s straightforward to run TensorBoard in a Modal app.

We will attach the same Volume that we attached to our training function so that TensorBoard can read the logs. For this to work with Modal, we first create a small piece of WSGI middleware that checks the Modal Volume for updates whenever the page is reloaded.

The WSGI app isn’t exposed directly through the TensorBoard library, but we can build it the same way it’s built internally — see the TensorBoard source code for details.
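The middleware itself is only a few lines. The following is a minimal sketch built on two assumptions. First, the refresh step is passed in as a zero-argument callable; in the tutorial this would be the Volume's `reload` method. Second, a tiny stand-in app replaces TensorBoard so the code runs with the standard library alone. `ReloadBeforeRequest` and `hello_app` are hypothetical names.

```python
from typing import Callable, Iterable
from wsgiref.util import setup_testing_defaults

# Loose WSGI type alias: app(environ, start_response) -> iterable of bytes.
WSGIApp = Callable[[dict, Callable], Iterable[bytes]]


class ReloadBeforeRequest:
    """WSGI middleware that runs a refresh hook before delegating each request."""

    def __init__(self, app: WSGIApp, refresh: Callable[[], None]):
        self.app = app
        self.refresh = refresh  # e.g. the Volume's reload method (assumption)

    def __call__(self, environ: dict, start_response: Callable) -> Iterable[bytes]:
        self.refresh()  # pick up new files before the wrapped app reads them
        return self.app(environ, start_response)


def hello_app(environ, start_response):
    """Tiny stand-in for the TensorBoard WSGI app."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"logs are fresh"]


if __name__ == "__main__":
    calls = []
    app = ReloadBeforeRequest(hello_app, refresh=lambda: calls.append("reload"))
    environ = {}
    setup_testing_defaults(environ)  # fill in a minimal valid WSGI environ
    body = b"".join(app(environ, lambda status, headers: None))
    print(body, calls)  # b'logs are fresh' ['reload']
```

Wrapping TensorBoard would then look like `ReloadBeforeRequest(tensorboard_app, volume.reload)`. Here `tensorboard_app` is a hypothetical name for the WSGI app built as in the TensorBoard source, and the wrapped result is what a function decorated with `@modal.wsgi_app()` would return. Reloading on every request is the simplest policy. A busier server might limit how often it refreshes.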

The TensorBoard server runs in a different container from the training function and does not need GPU support. Also note that this server will be exposed to the public internet!

Local entrypoint code

Let’s kick everything off. Everything runs in an ephemeral “app” that gets destroyed once it’s done. To keep the TensorBoard web server running, we sleep in an infinite loop until the user presses Ctrl-C.
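In the tutorial, the `@app.local_entrypoint()` function would first start training and look up the TensorBoard URL. Those Modal calls are omitted here, and this sketch covers only the waiting part, using a hypothetical `keep_alive` helper. The `sleep` parameter is injectable only so the loop can be exercised without actually waiting.

```python
import time
from typing import Callable


def keep_alive(url: str, sleep: Callable[[float], None] = time.sleep) -> None:
    """Block until Ctrl-C so the ephemeral app (and TensorBoard) stays up."""
    print(f"TensorBoard available at {url}; press Ctrl-C to stop.")
    try:
        while True:
            sleep(1)
    except KeyboardInterrupt:
        # Ctrl-C raises KeyboardInterrupt in the local process; exit cleanly.
        print("Shutting down.")
```

At the end of the entrypoint you would call `keep_alive(url)` with the URL Modal reports for the TensorBoard function. Pressing Ctrl-C breaks the loop, the entrypoint returns, and the ephemeral app is torn down.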

The script will take a few minutes to run, although each epoch is quite fast since it runs on a GPU. The first time you run it, Modal may also have to build the image, which can add a few more minutes.