Product updates: GPU memory snapshots, notebooks, service tokens, and more

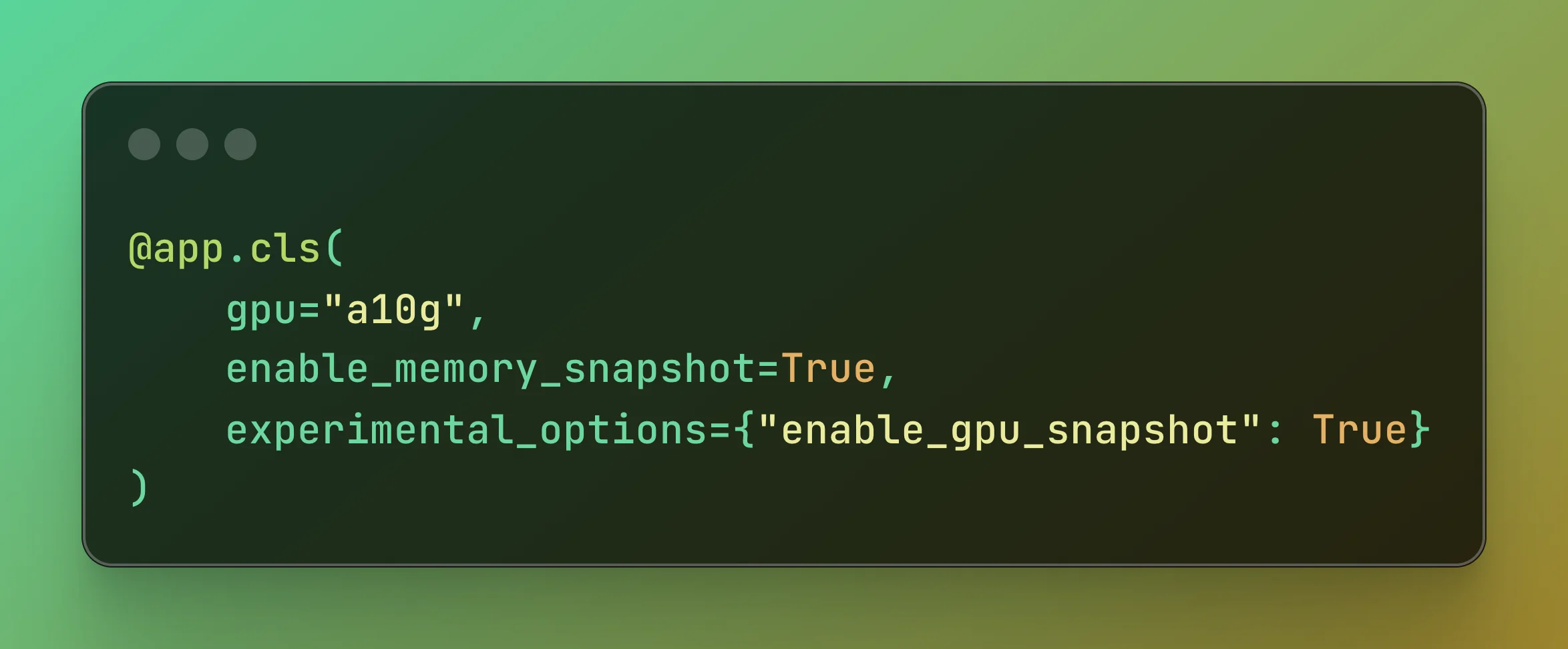

📸 GPU Memory Snapshots (Alpha)

We’ve brought the magic of memory snapshots to GPUs on Modal! You can now enjoy 10x faster cold boots for GPU workloads by skipping expensive steps like Torch compilation. If you’re already using memory snapshots, just add the `enable_gpu_snapshot` experimental option to get started.

Check out our blog post for more info.

📓 Introducing Modal Notebooks (Beta)

Notebooks let you write and execute Python code in Modal’s cloud from your browser. Because they’re backed by Modal’s serverless platform, you pay only for the compute you use. Attach custom images and distributed volumes, collaborate in real time, and share your work on the web.

To get started, open modal.com/notebooks in your browser, or check out our docs.

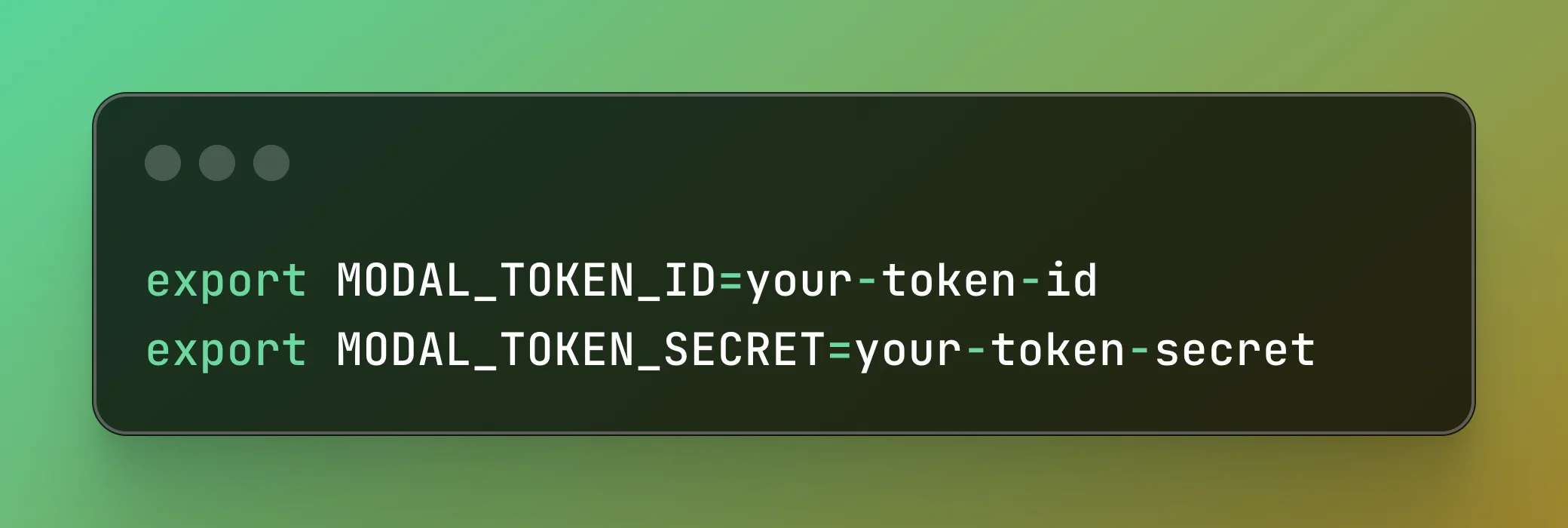

🤖 Service Tokens

Modal workspace managers can now create Service Users that have their own API tokens. This makes it easy to automate deploys of Modal apps without relying on a specific member’s tokens.

More details in our docs here!

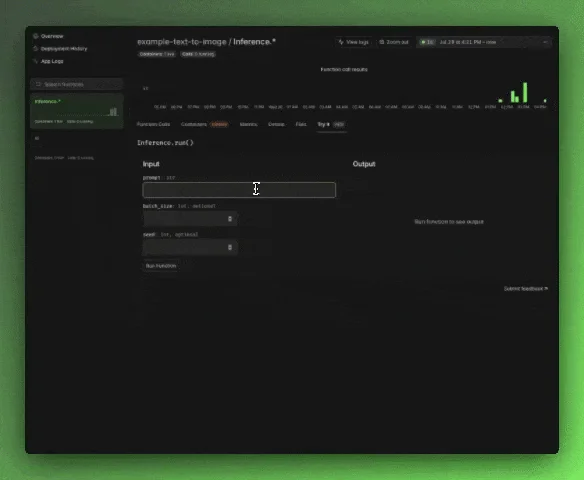

🏃 Run Modal Functions from the browser (Beta)

Modal now generates a UI for your Function based on its type annotations! Use it for easier testing and debugging, or as a user-friendly way to trigger internal workflows.

Note: The App must be deployed with Modal client version 1.1 or later, and every Function argument must have a type annotation.
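Because the generated UI depends on type annotations, it can help to check a function's signature before deploying. The following is a minimal sketch that uses only the standard library's `inspect` module. The helper `unannotated_params` and the example functions are hypothetical names for illustration and are not part of the Modal client; the check is meant to run on the plain Python function, before any Modal decorator is applied.

```python
import inspect


def unannotated_params(fn) -> list[str]:
    """Return the names of parameters of `fn` that lack a type annotation."""
    signature = inspect.signature(fn)
    return [
        name
        for name, param in signature.parameters.items()
        if param.annotation is inspect.Parameter.empty
    ]


# Hypothetical function: every argument is annotated.
def resize_image(url: str, width: int = 512, grayscale: bool = False) -> str:
    return f"{url}?w={width}&gray={grayscale}"


# Hypothetical function: `width` is missing its annotation.
def resize_image_untyped(url: str, width=512) -> str:
    return f"{url}?w={width}"


print(unannotated_params(resize_image))          # []
print(unannotated_params(resize_image_untyped))  # ['width']
```

An empty list means every argument carries an annotation; any names returned are the ones to annotate before deploying.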

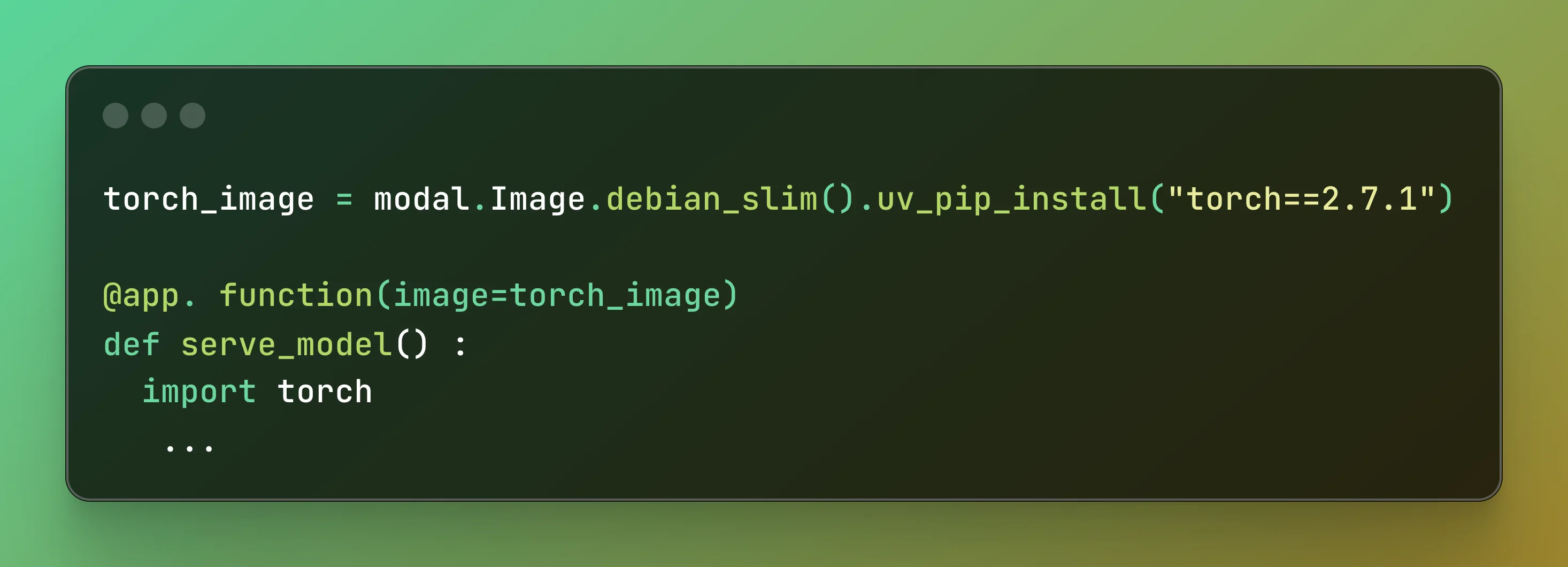

🧩 First-class Support for uv

Make your container builds even faster on Modal with uv. Use `uv_sync` to sync your Modal image with your local project and `uv_pip_install` to install packages lightning fast.

Read more about it in our changelog.

👩‍💻 Client updates

Run `pip install --upgrade modal` to get the latest client updates. Here are some highlights from the changelog:

- Introduced the concept of “named Sandboxes” for use cases where Sandboxes need to have unique ownership over a resource (1.1.1)

- Added a `.name` property and `.info()` method to `modal.Dict`, `modal.Queue`, `modal.Volume`, and `modal.Secret` objects (1.1.1)

- Introduced support for the `2025.06` Image Builder Version (1.1.0). These improvements should greatly reduce the risk of conflicts with user code dependencies. They also allow Modal Sandboxes to easily be used with existing Images or Dockerfiles that are not themselves compatible with the Modal client library.

🏎️ Run OpenAI’s gpt-oss model with vLLM

With our new gpt-oss example, you can deploy OpenAI’s new open-weight reasoning model on Modal in minutes using vLLM. Check it out here!

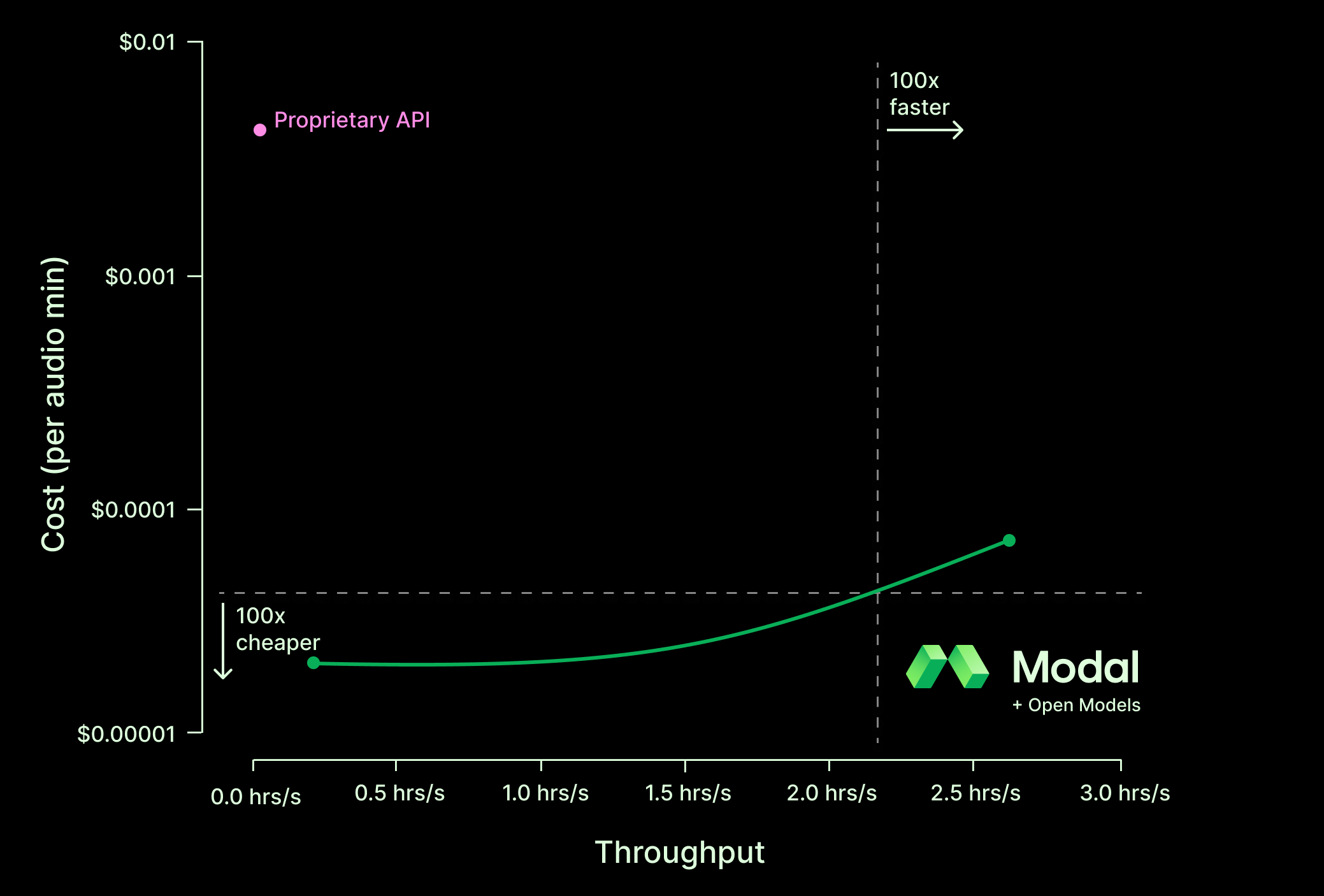

🔉 Transcribe a week of audio in a minute for a dollar

ICYMI, open speech-to-text models are really good now. Learn how to deploy an audio transcription service that is over 100x faster or 100x cheaper than proprietary APIs in our blog post here.

❤️ Customer spotlights: Lovable, Marimo, Pipecat

- Lovable uses Modal Sandboxes at massive scale to run LLM-generated code for thousands of apps. During a recent promotional weekend, Modal spun up >1M Sandboxes for them. Read the full case study here.

- Marimo, an open-source Python notebook, launched their cloud-hosted notebook workspace on Modal Sandboxes.

- Pipecat, an open-source real-time voice AI framework, trained their new turn detection model on Modal.

📅 Recent events

- We recently hosted 100+ builders at a Voice AI event with Daily, featuring a panel with researchers at NVIDIA and Mistral AI building state-of-the-art speech models. Missed it? Watch the livestream here!