The fastest way to scale inference

“The beauty of Modal is that you can scale inference to thousands of requests with just a few lines of Python.”

“We use Modal to run edge inference with <10ms overhead and batch jobs at large scale. Our team loves the platform for the power and flexibility it gives us.”

Code-first inference

Stay in your application code. Modal handles scaling, serving, and infrastructure behind the scenes.

Ship inference for 1M+ users from day one

Defined in code

Define your inference function with Modal’s Python SDK. Its ML dependencies and GPU requirements are declared next to your application code, so they stay in sync with it.

Low latency

Modal’s container engine starts a GPU container in under 1 s when your inference function is called, and GPU snapshotting lets you load a 7B-parameter model in seconds.

Elastic scale

Instantly scale out to 1,000+ GPUs during traffic spikes, then back down to zero when idle. No commitments, no waiting.
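To make "scale up on spikes, down to zero when idle" concrete, here is a minimal sketch of a generic scale-to-zero sizing rule. It is not Modal's actual autoscaler. The function `target_replicas` and its parameters (`in_flight` requests, `per_replica` concurrency, `max_replicas` cap) are hypothetical and chosen only for illustration:

```python
import math


def target_replicas(in_flight: int, per_replica: int, max_replicas: int) -> int:
    """Hypothetical sizing rule (not Modal's real policy): run just enough
    GPU replicas to serve the in-flight requests, capped at max_replicas,
    and scale to zero when there is no traffic."""
    if per_replica <= 0:
        raise ValueError("per_replica must be positive")
    if in_flight <= 0:
        return 0  # idle: release every replica
    needed = math.ceil(in_flight / per_replica)  # round up so no request waits
    return min(needed, max_replicas)  # never exceed the configured ceiling


if __name__ == "__main__":
    print(target_replicas(0, 4, 1000))       # idle traffic
    print(target_replicas(10, 4, 1000))      # light traffic
    print(target_replicas(10_000, 4, 1000))  # spike hits the cap
```

Rounding up with `math.ceil` means a partially filled replica still counts, so a replica is available for every request until the cap is reached.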

Infrastructure optimized for every deployment pattern

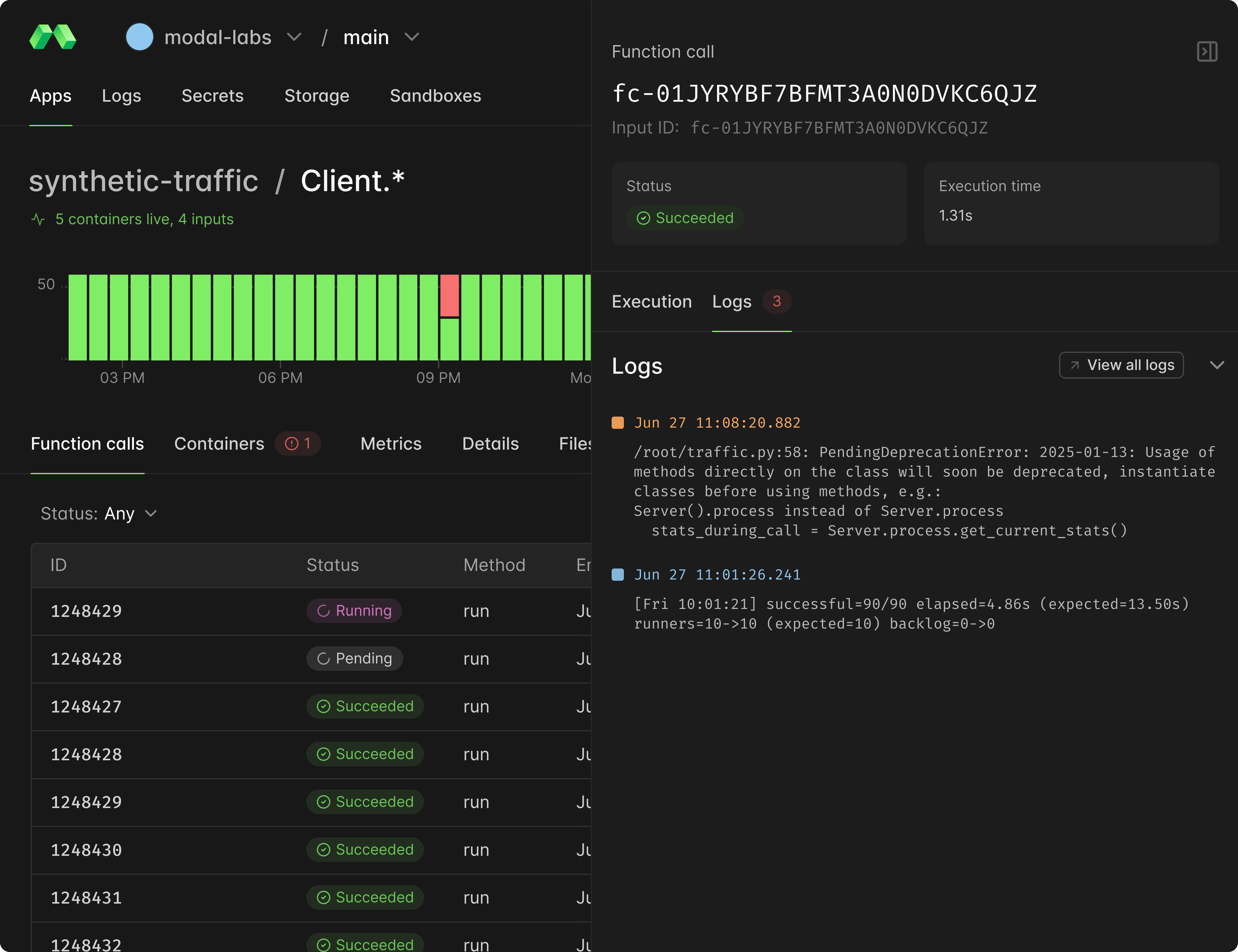

Get clear insight into production deployments