GLM-5 is available to try on Modal.

Modal Core Platform

Cloud infrastructure designed for AI workloads

Every layer of Modal’s platform is engineered to give you the tools to build robust, scalable data applications.

Fast cold starts. Faster feedback loops.

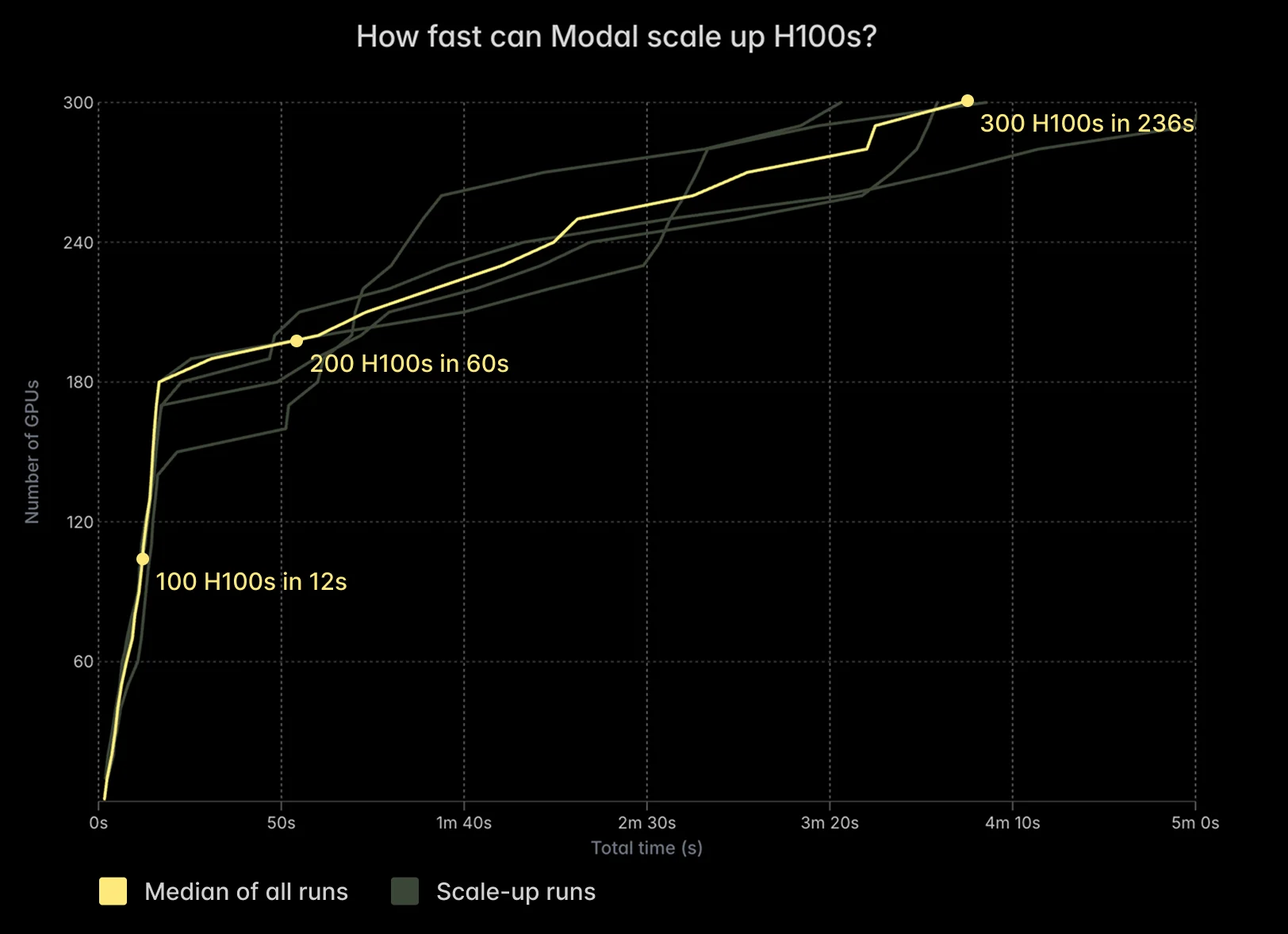

Scale to 1000+ GPUs in minutes. Then back down to zero.

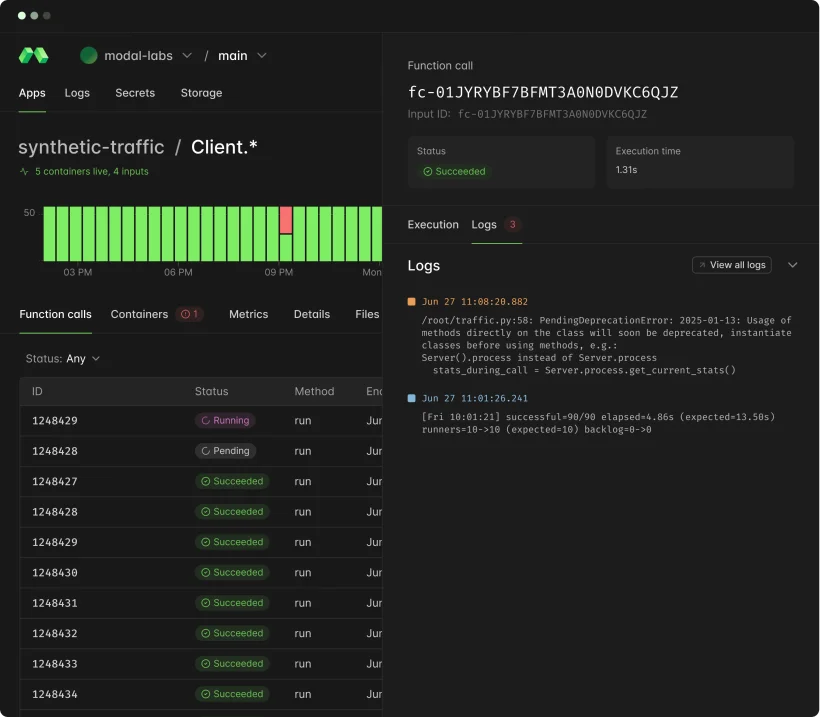

Observability as a first-class feature.