GLM-5 is available to try on Modal.

Modal Training

Train more, configure less

Launch more experiments and training jobs. Spin up single-node experiments or scale to multi-node GPU training instantly.

“Modal lets us deploy new ML models in hours rather than weeks. We use it across spam detection, recommendations, audio transcription, and video pipelines, and it’s helped us move faster with far less complexity.”

Mike Cohen, Head of AI & ML Engineering

Where researchers can run experiments, not ops

Define in code

Define your training function with Modal’s SDK. Easily keep ML dependencies and GPU requirements in sync with application code.

Native storage

Ingest training data from anywhere: Modal’s distributed Volumes, cloud buckets, or your local filesystem.

Sub-second startup

Modal’s container stack launches GPUs for your function in < 1s. Fan out experiments to accelerate your research.

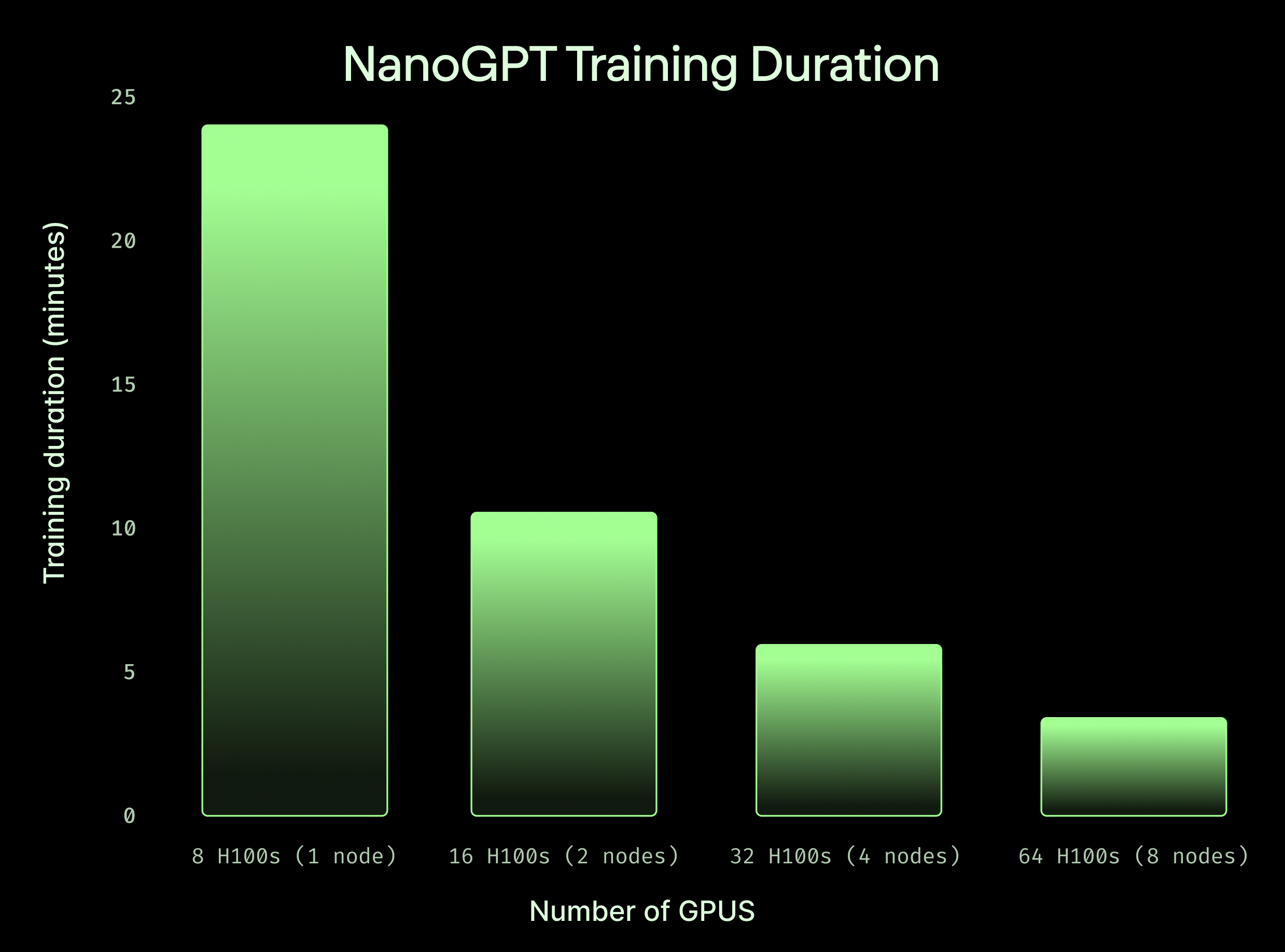

Speed up training jobs by going multi-node

Scale from 1 GPU to 64 with just one line of code

Spin up a cluster in a second with no minimum commitments

B200, H200, and H100 clusters equipped with InfiniBand and private networking