How to deploy Whisper to transcribe audio in seconds

In the past few years, AI/ML models have gotten so good that you can transcribe any good audio (and even some bad stuff) for basically zero cost and in seconds. That’s what we’re going to do here: transcribe a podcast using a few lines of code, the OpenAI Whisper model, and an H100 GPU on Modal.

What is the Whisper AI Model?

The Whisper AI model is OpenAI’s open-source automatic speech recognition (ASR, also called speech-to-text) system. It can transcribe audio in nearly 100 languages with high accuracy. Whisper was trained on 680,000 hours of multilingual data and is available in multiple sizes, from tiny (39M parameters) to large (1.5B parameters).

At its core, Whisper uses a transformer-based encoder-decoder architecture. When you feed it audio, it splits the audio into 30-second chunks and converts each chunk into a log-Mel spectrogram, a visual map of how sound frequencies change over time. The encoder then turns each spectrogram into a sequence of audio features for the decoder to attend to.
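To make the 30-second windowing concrete, here is a minimal NumPy sketch, assuming a mono waveform that has already been resampled to 16 kHz. The helper name chunk_audio is hypothetical: it splits the waveform into 30-second windows and zero-pads the last one, similar in spirit to the pad_or_trim helper in the openai-whisper package, which does this for a single window.

```python
import numpy as np

SAMPLE_RATE = 16_000                          # Whisper expects 16 kHz mono audio
CHUNK_SECONDS = 30                            # Whisper's fixed input window
CHUNK_SAMPLES = SAMPLE_RATE * CHUNK_SECONDS   # 480,000 samples per window


def chunk_audio(waveform: np.ndarray) -> np.ndarray:
    """Split a 1-D waveform into 30 s windows, zero-padding the last one.

    Hypothetical helper for illustration. Returns shape (n_chunks, CHUNK_SAMPLES).
    """
    if waveform.ndim != 1:
        raise ValueError("expected a mono (1-D) waveform")
    # Ceiling division; always return at least one window
    n_chunks = max(1, -(-len(waveform) // CHUNK_SAMPLES))
    padded = np.zeros(n_chunks * CHUNK_SAMPLES, dtype=np.float32)
    padded[: len(waveform)] = waveform
    return padded.reshape(n_chunks, CHUNK_SAMPLES)


# 70 seconds of silence -> three 30-second windows (the last one mostly padding)
chunks = chunk_audio(np.zeros(70 * SAMPLE_RATE, dtype=np.float32))
print(chunks.shape)  # (3, 480000)
```

This is a simplification: Whisper’s own transcribe() loop doesn’t cut at fixed boundaries but advances its 30-second window based on the timestamps the decoder predicts.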

The decoder generates text tokens one at a time. It uses attention mechanisms to figure out which parts of the audio matter for each word it’s writing. The whole system does a bunch of things at once:

- Language detection on the fly: feed it audio in French, Japanese, or English, and it figures it out

- Timestamp alignment: it knows not just what was said, but when it was said

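- Multi-task training: a single model handles transcription, translation into English, language identification, and voice activity detection, with special task tokens at the start of the decoder’s output telling it which job to do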

What makes Whisper so good at handling real-world audio is the sheer variety of stuff it was trained on. Podcasts, YouTube videos, audiobooks, and random conversations recorded in every possible acoustic condition. Got background noise? Heavy accent? Technical jargon? Whisper’s probably heard something similar before. The model even knows the difference between someone talking and background music or dead air, thanks to special tokens that mark non-speech events.

Ways to deploy Whisper

Since Whisper is open-source, there are many ways to use it. The simplest is to use Whisper via a hosted endpoint—for example, through OpenAI directly or via a 3rd party like Amazon Bedrock. On the other end of the spectrum, you could self-host Whisper using EC2 or GCE. This would require that you manually handle scaling, GPU capacity, and other infrastructure concerns.

In recent years, cloud platforms purpose-built for deploying AI models have become popular as well. Modal, for example, handles scaling and efficient allocation of GPU capacity while still giving developers full control of what model weights and inference logic they want to use. The rest of this tutorial shows you how to deploy Whisper on Modal.

How to deploy Whisper in minutes

Let’s transcribe. If you don’t have a Modal account, sign up at modal.com. You get $30 of GPU credits every month, so following this tutorial will be entirely free.

Then set up Modal locally: create a new project directory, set up a Python virtual environment to keep your dependencies clean, and install Modal (for GPU compute) and requests (for downloading audio files), for example with pip install modal requests.

Next, authenticate Modal on your machine by running modal setup. This opens your browser to grab an API token. Once that’s done, you’re ready to write the actual transcription code.

Here’s the full code we’ll use:

This is pretty brief for how powerful it is. Let’s walk through it.

Setting up the Modal app and container image

First, we create a Modal app and define the container image that’ll run on the GPU. Modal handles the infrastructure complexity; you just specify which packages you need. No config files are necessary, because all your dependencies are defined in-line with your application code. We’re using a slim Debian image with Python 3.12 and installing Whisper plus librosa (for audio processing). The uv_pip_install method uses uv under the hood for fast installs.

The entry point that runs locally

This function runs on your local machine, not the GPU. It downloads the audio file from whatever URL you provide, then ships the audio bytes to the GPU for transcription. The .remote() call is where the magic happens: it sends your audio to Modal’s infrastructure and returns the transcript. In a production deployment, you can also invoke the Modal function over HTTP, WebSockets, and other interfaces.

The GPU-accelerated transcription class

The @app.cls decorator tells Modal to run this class on an H100 GPU using our container image. The @modal.enter() method runs once when the container starts up, loading the Whisper model into memory. This happens before any transcription requests, so you’re not wasting time loading the model for each audio file.

Notice we’re using the “base” model here. You can swap this for “tiny”, “small”, “medium”, “large”, or “large-v3” depending on your accuracy needs and speed requirements. Larger models are more accurate but slower.

The actual transcription logic

This method takes the raw audio bytes and converts them into something Whisper can understand. Librosa loads the audio and resamples it to 16kHz (Whisper’s expected sample rate), then we pass it to the model for transcription. The model returns a dictionary with the text, timestamps, and other metadata, but we just grab the text here.
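Grabbing only result["text"] throws away the timestamps. In the openai-whisper package, the dictionary returned by model.transcribe() also has a "segments" list whose entries carry "start" and "end" (in seconds) plus "text". Here is a small sketch, with hypothetical helper names, that turns those segments into timestamped lines; it runs on a hand-made stand-in dictionary, not real model output, so you can try it without a GPU.

```python
def format_timestamp(seconds: float) -> str:
    """Render seconds as MM:SS.ss (hours are folded into minutes)."""
    minutes, secs = divmod(seconds, 60)
    return f"{int(minutes):02d}:{secs:05.2f}"


def format_segments(result: dict) -> str:
    """Turn a Whisper-style result dict into one timestamped line per segment."""
    lines = []
    for seg in result.get("segments", []):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        lines.append(f"[{start} --> {end}] {seg['text'].strip()}")
    return "\n".join(lines)


# Hand-made stand-in shaped like the dict model.transcribe(audio) returns
fake_result = {
    "text": " Hello and welcome. Today we're talking about sport.",
    "language": "en",
    "segments": [
        {"start": 0.0, "end": 2.5, "text": " Hello and welcome."},
        {"start": 2.5, "end": 61.25, "text": " Today we're talking about sport."},
    ],
}
print(format_segments(fake_result))
# [00:00.00 --> 00:02.50] Hello and welcome.
# [00:02.50 --> 01:01.25] Today we're talking about sport.
```

To use this on a real episode, the Modal method would return result["segments"] (or the formatted string) instead of result["text"].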

To run this on your own podcast, save the code to transcribe.py and launch it with modal run transcribe.py, passing the URL of your audio file to the local entry point.

The first run takes longer because Modal has to build the container image and cache it, but after that, you’ll get transcriptions in seconds to minutes depending on the audio length and model size.

Transcribing a podcast

Let’s do a real-life run-through. We’ll transcribe an episode of The Rest Is History podcast, grabbing the raw MP3 audio file URL from Podchaser. We’ll use the recent Mad Victorian Sport episode.

Here’s what we’ll run:

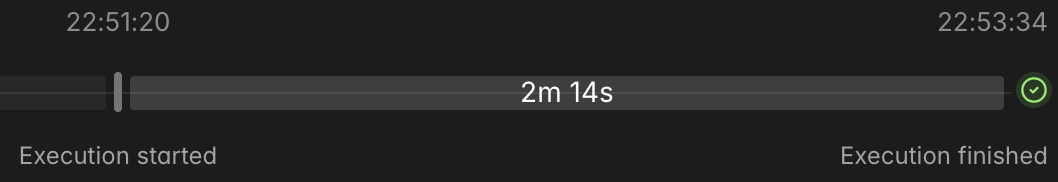

Because this is the first time we’ve built this image, there’s about a minute of initial build time. After that, we’re into the actual model run, which takes 2m14s.

The podcast audio is 57m50s, so we are transcribing about 26 minutes of audio per minute of processing time.
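The speedup is just the ratio of audio length to processing time. Here it is spelled out with the two durations reported above (the one-minute image build is excluded):

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert an MmSSs duration into total seconds."""
    return minutes * 60 + seconds


audio_s = to_seconds(57, 50)       # episode length: 57m50s
processing_s = to_seconds(2, 14)   # model run time: 2m14s

speedup = audio_s / processing_s   # minutes of audio per minute of compute
print(f"{audio_s} s of audio in {processing_s} s -> {speedup:.1f}x real time")
# 3470 s of audio in 134 s -> 25.9x real time
```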

Here’s the start of the transcription:

Thank you for listening to The Rest is History. For weekly bonus episodes, add free listening, early access to series and membership of our much-loved chat community, go to TheRestisHistory.com and join the club, that is TheRestisHistory.com. This episode is brought to you by US Bank. They don’t just cheer you on, they help every move count. With US Bank’s smartly checking and savings account to help you track your spending and grow your savings, your finances can go further. Because when you have the right partner on your side, there’s no limit to what you can achieve. That’s the power of us.

Looks pretty good. The entire transcription is 10,511 words long, and it cost $0.11, so the cost per word was $0.0000105, or about 0.001 cents per word. To put that in perspective, transcribing a million words (roughly four days of nonstop audio at this episode’s speaking pace) would cost you about $10.50. Still free with credits!
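Here is the same cost arithmetic, using only the figures from this run ($0.11, 10,511 words, 57m50s of audio). The words-per-minute rate is what converts a word count into days of audio:

```python
total_cost_usd = 0.11
words = 10_511
audio_minutes = 57 + 50 / 60             # 57m50s

cost_per_word = total_cost_usd / words
words_per_minute = words / audio_minutes

million = 1_000_000
cost_per_million = cost_per_word * million
days_per_million = million / words_per_minute / 60 / 24  # minutes -> days

print(f"cost per word: ${cost_per_word:.7f}")
print(f"1M words: ${cost_per_million:.2f}, about {days_per_million:.1f} days of audio")
# cost per word: $0.0000105
# 1M words: $10.47, about 3.8 days of audio
```

At this episode’s pace of roughly 180 words per minute, the unrounded figures put a million words at about $10.47 and a little under four days of audio.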

What other models can I use for transcription?

Since Whisper was released, there’s been an explosion of new open-source ASR models. Some recent ones have garnered much excitement for exhibiting higher throughput, higher accuracy, and/or better real-time support than Whisper (e.g. Kyutai STT, NVIDIA Parakeet, Mistral Voxtral). Check out our blog post on how to get 100x faster and cheaper batch transcription using Parakeet + Modal compared to proprietary ASR providers.

Ready to build with Whisper or any other AI model? Sign up for Modal and get $30 in free credits. Whether you’re running open-source models or your own custom models, Modal gives you instant access to thousands of GPUs, from T4s to B200s. No waiting for quota, configuring Kubernetes, or wasting money on idle costs: just fluid GPU compute you can attach to your inference code.
