What is an AI code sandbox?

The recent surge in LLM-generated code has revived a popular environment: code sandboxes.

Today, a significant portion of code intended for production is written by non-deterministic systems, notably IDE-integrated AI agents. This promises a massive boost in developer productivity, but it also increases the risk of critical errors and security holes: LLMs are proficient at coding, yet they are unpredictable and can make mistakes. AI-assisted engineering efforts therefore need a safe environment that can isolate and execute generated code, containing the blast radius of a costly mistake.

This isolated environment is known as an AI code sandbox.

What are code sandboxes?

Code sandboxes have a long, curious history. One of the earliest precursors appeared in Unix in 1979: chroot, an operation that changes the apparent root directory of a running process, blocking it from accessing files outside the designated directory tree. But the term sandbox only became common when the Java VM made it possible to run untrusted applets in a restricted environment, enforced in part by a bytecode verifier. Since then, it’s existed as a feature in various runtimes. Today’s younger developers probably associate the phrase code sandbox with JSFiddle, a hugely popular 2009 project that made it easy to test JavaScript, HTML, and CSS snippets.

Technically speaking, code sandboxes never disappeared. But from a branding standpoint, they were somewhat absorbed into the sprawling, dominant category of containerized architecture. In recent months, however, there’s been a resurgence of interest in sandbox solutions.

AI coding agents and the revival of sandboxes

Soon after OpenAI released ChatGPT, there was ample public amazement at the language model’s ability to write detailed code. At the time, it was mainly Twitter users posting React snippets they had generated by hand in the chat interface. There was also some skepticism about whether AI would actually make its way into the professional developer ecosystem.

Fast-forward two years, and most software businesses are using LLMs to generate code. They’re doing so via dedicated AI agents that integrate directly with popular IDEs like VS Code. Popular examples of AI coding agents include Cursor and Windsurf. The advantage of these tools is their straightforward UI and UX: they feel more like a programming partner than a standalone SaaS product. These tools are a hit; there are reports, likely exaggerated, that 97% of developers are actively using AI coding tools.

This surge in AI coding agents gives rise to two contrasting forces. The first is that software development is radically accelerating, with features (or entire apps) being “vibe-coded” in the span of a day. The second is that the proliferation of code, especially code created by models that routinely hallucinate entire packages, can lead to a proliferation of errors. These errors can crash a multi-tenant environment, impacting other users. In worst-case scenarios, a bad actor can use prompt injection to make an LLM introduce a deliberate, exploitable security vulnerability; for AI agents with long-lived memory, this attack can succeed even if the immediate user has good intentions but is unaware of the agent’s poisoned context.

These risks are mitigated by isolating LLM-generated code’s execution, preventing it from crashing an environment or breaching other programs. AI code sandboxes are dynamic environments that can ephemerally containerize code at runtime.

How do AI code sandboxes actually work?

There are a couple of different approaches to implementing AI code sandboxes under the hood. Either VMs or containers can serve as the underlying compute environment. On top of that, there needs to be an interface to quickly spin up these compute environments, sync over the code to be executed, and communicate with the processes running inside.

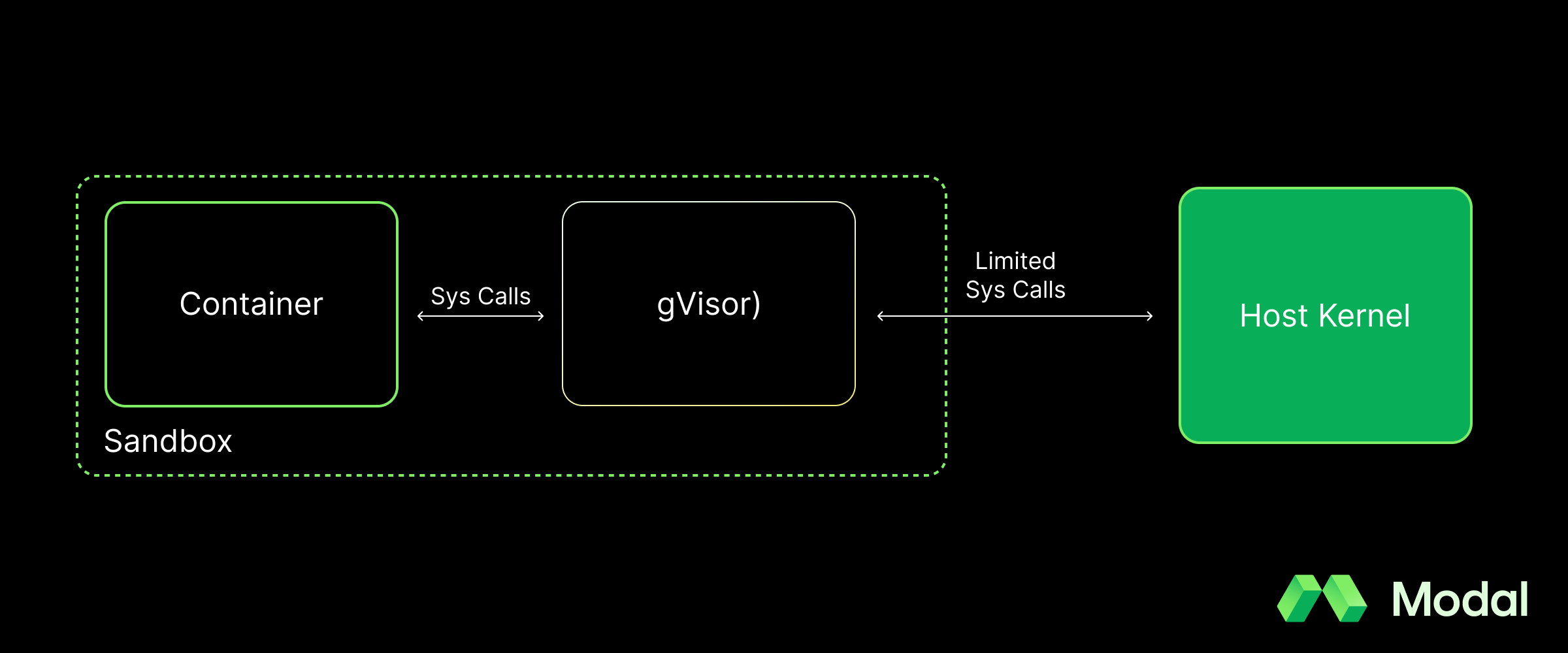
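The three responsibilities described above (spin up an environment, sync code into it, communicate with the process inside) can be illustrated with a deliberately simplified local sketch. It assumes a temporary directory and a child Python process stand in for the real compute environment; the function name `run_in_scratch_dir` is hypothetical. A child process like this offers no real isolation, so treat it as a picture of the control flow only, not as a sandbox.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_in_scratch_dir(code: str, timeout_s: float = 5.0) -> subprocess.CompletedProcess:
    """Run `code` in a throwaway directory and return the finished process.

    NOTE: illustrative only. A plain child process is NOT a security boundary.
    """
    # 1. Spin up: a temporary directory stands in for a fresh environment.
    #    It is deleted when the `with` block exits, so nothing persists.
    with tempfile.TemporaryDirectory() as workdir:
        # 2. Sync: copy the generated code into that environment.
        script = Path(workdir) / "main.py"
        script.write_text(code)
        # 3. Communicate: execute the code and capture stdout/stderr.
        #    -I runs Python in isolated mode (ignores env vars and user site-packages).
        return subprocess.run(
            [sys.executable, "-I", str(script)],
            cwd=workdir,
            capture_output=True,
            text=True,
            timeout=timeout_s,  # raises subprocess.TimeoutExpired on a hang
        )


result = run_in_scratch_dir("print(2 + 2)")
print(result.stdout.strip())
```

A production system would replace the temporary directory and child process with a VM or hardened container, while keeping roughly the same create, sync, execute, and tear-down shape.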

It is critical that the underlying compute environment is airtight; that is the flagship purpose of a sandbox. This is where technologies like gVisor come into play. gVisor is a secure container runtime that is specifically designed for running untrusted code in production environments. Unlike standard container runtimes like runc, gVisor intercepts syscalls from guest processes and services them in its own user-space kernel, rather than passing them straight to the host kernel. This prevents these processes from affecting the host (as kernel exploits like the Dirty Pipe vulnerability do) beyond the permissions gVisor specifically grants them.
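As a rough illustration of what opting into gVisor can look like, the sketch below builds a `docker run` command line that selects gVisor's `runsc` runtime instead of the default `runc`. It assumes Docker is installed and that gVisor has been registered with Docker under the runtime name `runsc`; the helper name, image tag, and resource limits are illustrative choices, not recommendations from any vendor. The code only constructs the argument list and does not launch anything.

```python
import shlex


def gvisor_docker_command(image: str, command: list[str]) -> list[str]:
    """Build a `docker run` argv that executes `command` under gVisor's runsc.

    Assumes the host has gVisor registered with Docker as the "runsc" runtime.
    """
    return [
        "docker", "run",
        "--rm",              # delete the container when it exits
        "--runtime=runsc",   # use gVisor instead of the default runc
        "--network=none",    # no network access unless explicitly granted
        "--memory=256m",     # illustrative memory cap
        "--pids-limit=64",   # illustrative cap on process count
        image,
        *command,
    ]


argv = gvisor_docker_command("python:3.12-slim", ["python", "-c", "print('hello')"])
print(shlex.join(argv))
```

On a host configured this way, the resulting list could be passed to `subprocess.run(argv)`; swapping `--runtime=runsc` back to the default is the only difference between the hardened and unhardened invocation.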

Beyond the compute environment, a variety of additional features are needed to make sandboxes usable. These include:

- Filesystem APIs to sync data in and out of the sandbox

- Networking primitives to enable and control communications with other servers

- An orchestration layer to provision instances, scale resources, and manage the sandbox lifecycle

- Filesystem and/or memory snapshotting to save and restore sandbox state

Build vs buy

Hypothetically, a company could build its own AI code sandbox infrastructure by leveraging Kubernetes and open-source isolation environments like gVisor. This, however, would incur significant operational cost to build and maintain. Container cold starts would also be unoptimized without additional effort, making the system slow both to iterate on and to serve end users. Making the system scalable to millions of concurrent sessions would be another massive lift.

For these reasons, most companies prefer a managed AI code sandbox service that enables fast developer velocity and reduces cost of ownership.

What are the use cases for AI code sandboxes?

There are multiple real-world problems where AI code sandboxes are particularly applicable. Some of these problems are pertinent to the recent wave of AI-focused companies. Others are problems that companies might face when using AI-integrated engineering products. In either scenario, AI code sandboxes provide a critical layer of isolation to protect their infrastructure.

Let’s discuss some of these real-world problems in detail.

Background coding agents

Today, many developers use AI-powered editors like Cursor or Windsurf; others use autonomous coding agents like Devin by Cognition. These companies have all introduced background coding agent products, which need to clone a developer environment, modify code, and execute it to effectively test changes. This is similar to how developers use dedicated CI/CD systems to build and test an application before deploying it to staging or production nodes. Coding agents write code for a near-endless variety of contexts, which means they benefit from sandboxes that support dynamically defined environments.

Code reviews

Sometimes, teams opt to run linters or other tests that evaluate whether an application can be safely built. Code review platforms need AI code sandboxes because the code they run, whether AI-generated or human-written, is produced externally and cannot be trusted. For these products, a sandbox can be spun up to run the tests and report the results, then discarded.

LLM code interpreters

Many AI chatbots, like ChatGPT, will write code and run it against AI-generated tests to validate whether it behaves as expected. For example, Quora’s chatbot platform Poe has chatbots that can write and evaluate code. In other cases, AI chatbots might write and execute code to answer a user prompt that requires analysis. In all of these scenarios, the LLM code interpreter needs a safe, ephemeral environment for execution.

Reinforcement learning

In reinforcement learning (RL), an agent receives information about its current state and takes the next action that maximizes expected reward. During the learning process, the agent’s model weights are updated to favor actions that are likely to result in a higher reward.

In the context of training code generation agents, sandboxes are used to safely execute AI code and score the outputs via tests. The results are then fed back to the model as a reward signal. This requires bursting up to many sandboxes at once to run evals in parallel. Without this parallelization, the training process is bottlenecked on CPU-dependent steps, causing GPU utilization to suffer.
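The scoring loop described above can be sketched as follows: each candidate program is run against a small test suite in a separate process, the fraction of passing tests becomes its reward, and candidates are evaluated in parallel. Everything here is an assumption for illustration: the `add` task, the `TESTS` list, and the pass-fraction reward are hypothetical, and local child processes stand in for real sandboxes (they provide no isolation).

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor

# Hypothetical test cases for a function named `add`: (arguments, expected result).
TESTS = [((1, 2), 3), ((0, 0), 0), ((-1, 1), 0)]


def passes(candidate: str, args: tuple, expected: object) -> bool:
    """Run one test against one candidate in a fresh Python process."""
    program = f"{candidate}\nassert add(*{args!r}) == {expected!r}\n"
    try:
        proc = subprocess.run(
            [sys.executable, "-I", "-c", program],
            capture_output=True,
            timeout=10,
        )
    except subprocess.TimeoutExpired:
        return False  # a hung candidate earns nothing for this test
    return proc.returncode == 0


def reward(candidate: str) -> float:
    """Fraction of tests passed, used as the scalar reward signal."""
    return sum(passes(candidate, a, e) for a, e in TESTS) / len(TESTS)


candidates = [
    "def add(a, b):\n    return a + b",  # correct
    "def add(a, b):\n    return a - b",  # wrong for some inputs
    "def add(a, b)\n    return a + b",   # syntax error
]

# Threads suffice here because each worker only waits on a child process.
with ThreadPoolExecutor(max_workers=8) as pool:
    rewards = list(pool.map(reward, candidates))

print(rewards)
```

In a real training run the pool of threads would be replaced by a fleet of sandboxes, which is where the ability to burst to many instances at once matters: the GPU-side update cannot proceed until these CPU-side scores come back.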

AI-generated apps

There’s an emerging category of tools that generate entire applications with agents. Examples of this include Lovable (a Modal customer!), Replit, and even Webflow. However, because huge volumes of code are written by the underlying AI agent, executing this generated code is unsafe for the host application’s stack (e.g. Lovable’s servers) unless it’s done in a sandbox.

What features are important for AI code sandboxes?

Several AI code sandbox vendors exist, including Modal! When evaluating solutions, developers should check for several must-have features:

Fast cold starts

For agentic or on-demand coding workflows, every second counts. Sandboxes that cold start in under a second enable near-real-time interactivity, which is important for applications like AI pair programming or dynamic app generation.

For internal use cases, the cold start metric may matter less than how many concurrent sandboxes you can scale to. That said, there’s still a big difference to internal productivity between a 5-second lead time and a minute. At the end of the day, cold start length can be a large contributor to overall latency.

Strong isolation and security

Given that AI code sandboxes are often leveraged for security guarantees, a vendor’s security posture is of paramount importance. First and foremost, you should evaluate the kernel-level isolation system. Some systems are more robust than others (for example, gVisor runs its own application kernel in user space, while runc shares the host kernel directly). You should also look for built-in egress control features, unless you want to build those yourself. Finally, for production-grade applications, you’ll want a vendor that has a proven track record with compliance standards like SOC 2 and HIPAA.

Elastic, high-concurrency scaling

Some products, such as Modal, can spin up thousands of containers in seconds. If you’re running evals across a matrix of inputs or exposing a product to millions of active users, fast scalability is key for a good user experience.

Dynamic runtime environments

Oftentimes, AI-generated code needs a specific environment to run in. Most vendors will require you to bring and manage your own set of Docker images, but this doesn’t work well if you’re evaluating code whose dependencies are not known until generation time. Modal specifically offers more flexibility with Python-defined images, which means you can dynamically define sandboxes at runtime.

Filesystem and memory snapshotting

There are certain use cases where saving and then restoring a sandbox’s state can be extremely useful. For example, you might want to back up your sandbox’s state for debugging, run large-scale fan-out experiments, or branch the current state to test different code changes independently. To reduce cold start latency, look for providers that optimize how they cache and restore snapshot images.
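The branching use case can be pictured with a toy filesystem-only version: snapshot a working directory once, then restore it into two independent copies and change only one of them. This sketch assumes plain directory copies via `shutil.copytree` stand in for a provider's snapshot mechanism; the `snapshot` and `restore` helpers are hypothetical, and real providers typically use far more efficient techniques than full copies.

```python
import shutil
import tempfile
from pathlib import Path


def snapshot(workdir: Path, store: Path, name: str) -> Path:
    """Copy the sandbox's working directory into the snapshot store."""
    target = store / name
    shutil.copytree(workdir, target)  # creates missing parent directories
    return target


def restore(snapshot_dir: Path, dest: Path) -> Path:
    """Create a new working directory from a snapshot."""
    shutil.copytree(snapshot_dir, dest)
    return dest


root = Path(tempfile.mkdtemp())
work = root / "sandbox"
work.mkdir()
(work / "app.py").write_text("VERSION = 1\n")

base = snapshot(work, root / "snapshots", "base")

# Branch the same starting state into two independent working copies.
branch_a = restore(base, root / "branch_a")
branch_b = restore(base, root / "branch_b")
(branch_a / "app.py").write_text("VERSION = 2\n")  # change only branch A

a_text = (branch_a / "app.py").read_text().strip()
b_text = (branch_b / "app.py").read_text().strip()
print(a_text)  # VERSION = 2
print(b_text)  # VERSION = 1

shutil.rmtree(root)  # clean up the scratch area
```

Because each branch starts from the same snapshot, a change tested in one copy cannot leak into the other, which is the property that makes fan-out experiments safe.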

Built-in networking primitives

Good sandboxes let you expose live endpoints via tunnels—for example, to power a browser-based REPL or webhook tester. This isn’t essential for all workloads, but it can save hours of plumbing if your use case relies on streamed data (e.g. virtual shell, video files, live market data) over UDP. It also keeps sandboxes closer to real-world deployments.

On the security front, you will also want an easy way to control outbound networking.
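Outbound control is commonly expressed as an allowlist of CIDR ranges. The decision logic can be sketched with Python's standard `ipaddress` module, as below. The ranges and the `egress_allowed` helper are hypothetical, and in practice enforcement happens at the network layer (firewall or routing rules around the sandbox), not in application code like this.

```python
import ipaddress

# Hypothetical allowlist: only these CIDR ranges may be reached from the sandbox.
ALLOWED_CIDRS = [ipaddress.ip_network(c) for c in ("10.0.0.0/8", "203.0.113.0/24")]


def egress_allowed(destination_ip: str) -> bool:
    """Return True if the destination falls inside any allowed range."""
    addr = ipaddress.ip_address(destination_ip)
    return any(addr in net for net in ALLOWED_CIDRS)


print(egress_allowed("10.1.2.3"))      # True
print(egress_allowed("198.51.100.7"))  # False
```

A default-deny policy like this means a compromised or misbehaving sandbox can only reach destinations that were explicitly approved.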

Developer-first workflow

The best sandboxes feel like an extension of your local dev environment. Look for tools that require minimal boilerplate and have clean SDKs and infra-as-code patterns. If your team values iteration speed and hates dealing with Dockerfiles and YAML, this matters.

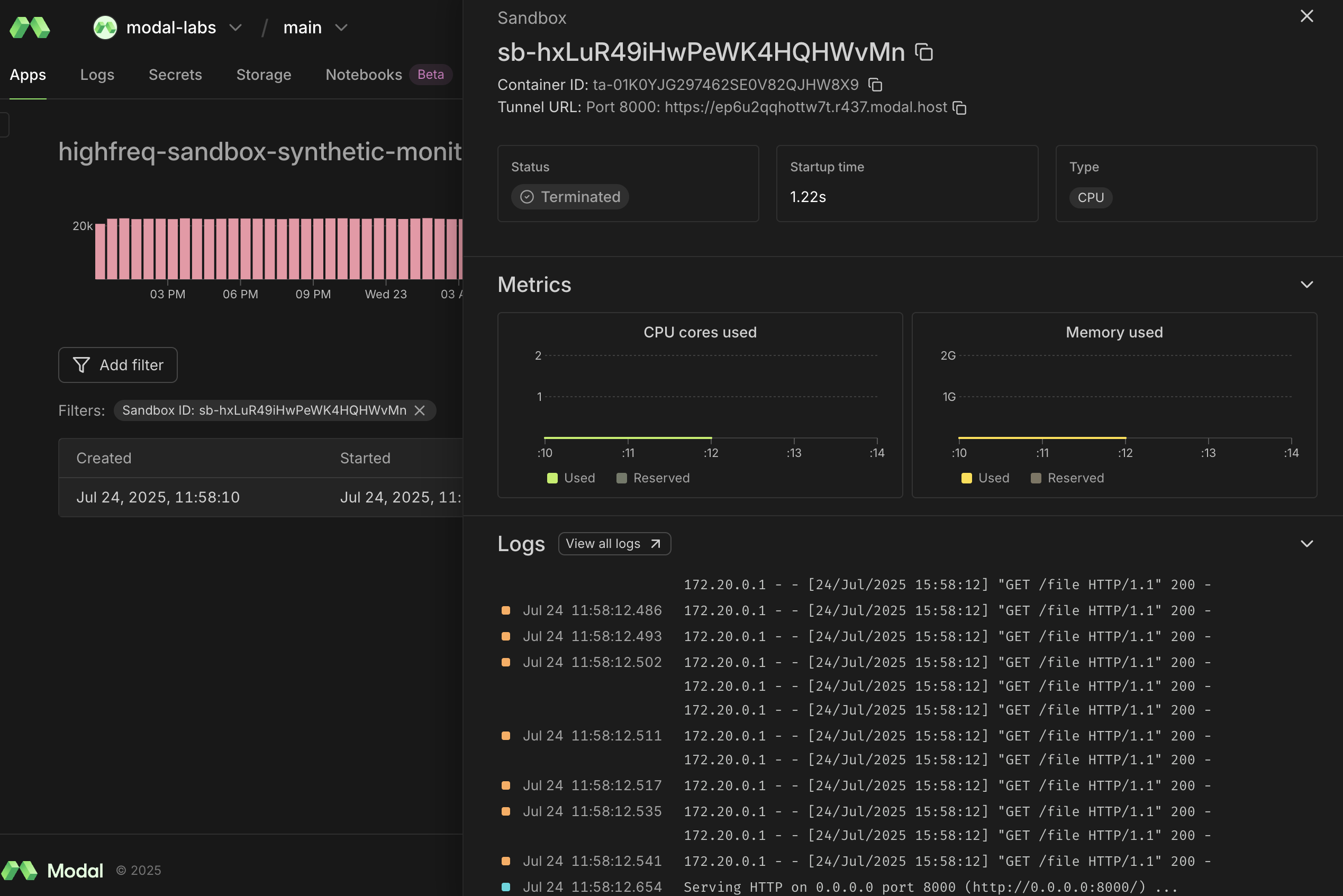

For production use cases, having granular observability features for debugging is important, too. You will want dashboards, metrics, and logs that are easy to parse down to the individual sandbox level.

A closing thought: we’re building in this space

If it wasn’t already obvious, we have a dedicated AI code sandbox product. It’s used by companies like Lovable and Quora at massive scale.

Modal Sandboxes deliver sub-second cold starts and rapid scaling through our proprietary container stack. On top of that, we’ve built a rich layer of features for networking, filesystem access, and snapshotting to help you ship your AI coding features faster!

Interested in trying it out? Simply sign up and run the snippet below:

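```python
import modal

# Look up the app that will own the sandbox, creating it if it does not exist.
app = modal.App.lookup("sandbox-manager", create_if_missing=True)

# Start a sandbox and run a command inside it.
sb = modal.Sandbox.create(app=app)
p = sb.exec("python", "-c", "print('hello')")
print(p.stdout.read())

# Shut the sandbox down when finished.
sb.terminate()
```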