Best frameworks for fine-tuning LLMs in 2025

In this article, we cover state-of-the-art frameworks for fine-tuning large language models (LLMs) in 2025. These frameworks make it faster, cheaper, and simpler to fine-tune models like LLaMA 3/LLaMA 3.1, Mistral, Mixtral, or Pythia on your own data.


What are Axolotl, Unsloth, and Torchtune?

Axolotl, Unsloth, and Torchtune are three of the most popular frameworks for fine-tuning large language models.

These frameworks simplify the fine-tuning process. Users can apply state-of-the-art optimization techniques when fine-tuning open-weights models on their own datasets, without implementing training procedures from scratch.

They are also built with efficiency in mind, and can offer faster training and lower memory usage.

Takeaways

- If you are a beginner: Use Axolotl.

- If you have limited GPU resources: Use Unsloth.

- If you prefer working directly with PyTorch: Use Torchtune.

- If you want to train on more than one GPU: Use Axolotl.

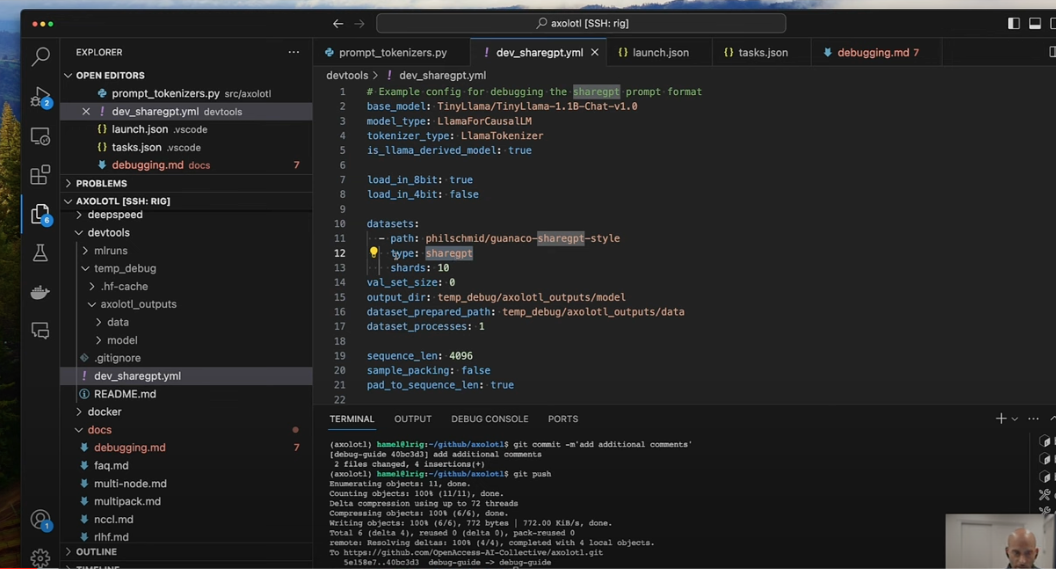

Axolotl

Axolotl is a wrapper for lower-level Hugging Face libraries like Transformers, retaining most of the granular control they offer while being much easier to use. It frees you up to focus more on your data rather than the technical details of the fine-tuning process.

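Axolotl comes with many built-in defaults and optimizations, which reduces the need for manual configuration. It also includes useful features like sample packing. Sample packing combines several short training examples into one sequence up to the maximum sequence length, so less compute is spent on padding tokens.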
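To make the idea concrete, here is a minimal sketch that treats sample packing as a bin-packing problem and solves it with a simple first-fit-decreasing heuristic. The function names and example lengths are hypothetical. This is not Axolotl's implementation: a real packer typically also has to adjust attention masks or position IDs so that packed examples don't attend to each other.

```python
from typing import List


def pack_first_fit_decreasing(lengths: List[int], max_len: int) -> List[List[int]]:
    """Group example indices into packed sequences of at most max_len tokens.

    Hypothetical helper: a simplified first-fit-decreasing packer for
    illustration only, not Axolotl's actual sample-packing code.
    """
    # Place the longest examples first; this usually leaves fewer gaps.
    order = sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True)
    bins: List[List[int]] = []  # example indices in each packed sequence
    free: List[int] = []        # remaining token capacity of each sequence
    for i in order:
        n = lengths[i]
        if n > max_len:
            raise ValueError(f"example {i} has {n} tokens, more than max_len={max_len}")
        # Put the example into the first sequence that still has room.
        for b, room in enumerate(free):
            if n <= room:
                bins[b].append(i)
                free[b] -= n
                break
        else:
            # No existing sequence has room, so start a new one.
            bins.append([i])
            free.append(max_len - n)
    return bins


def padding_tokens(num_sequences: int, total_tokens: int, max_len: int) -> int:
    """Padding needed when every sequence is padded out to max_len."""
    return num_sequences * max_len - total_tokens


if __name__ == "__main__":
    lengths = [900, 300, 700, 100, 1000, 50]  # made-up token counts
    max_len = 1024
    packed = pack_first_fit_decreasing(lengths, max_len)
    print(packed)  # [[4], [0, 3], [2, 1], [5]]
    # One example per sequence: 6 sequences.
    print(padding_tokens(len(lengths), sum(lengths), max_len))  # 3094
    # Packed: 4 sequences.
    print(padding_tokens(len(packed), sum(lengths), max_len))  # 1046
```

With these made-up lengths, packing reduces the number of sequences from six to four. It also cuts the padding from 3,094 to 1,046 tokens.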

With Axolotl, you can train open-weights models available on Hugging Face, such as LLaMA 3/LLaMA 3.1, Pythia, and Falcon.

You should consider using Axolotl if you don’t want to get too deep into the math of LLMs and just want to fine-tune a model.
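The sketch below shows how little you have to specify. It writes a minimal LoRA config in the YAML format that Axolotl reads. The key names follow Axolotl's published example configs, such as its LLaMA 3 LoRA example. Option names can change between releases, so check them against the version you install. The base model, dataset, and hyperparameter values are illustrative placeholders, and `write_config` is a hypothetical helper.

```python
from pathlib import Path

# Minimal Axolotl-style LoRA config. Key names follow Axolotl's example configs;
# the model ID, dataset ID, and hyperparameters are illustrative placeholders.
CONFIG_YAML = """\
base_model: NousResearch/Meta-Llama-3-8B
datasets:
  - path: mhenrichsen/alpaca_2k_test
    type: alpaca
output_dir: ./outputs/lora-out

sequence_len: 4096
sample_packing: true
pad_to_sequence_len: true

adapter: lora
lora_r: 32
lora_alpha: 16
lora_dropout: 0.05
lora_target_linear: true

micro_batch_size: 2
gradient_accumulation_steps: 4
num_epochs: 1
learning_rate: 0.0002
"""


def write_config(path: str = "lora-llama3.yml") -> Path:
    """Hypothetical helper: write the config to disk for Axolotl's CLI."""
    out = Path(path)
    out.write_text(CONFIG_YAML)
    return out


if __name__ == "__main__":
    cfg = write_config()
    # Training is then launched from the shell; this form appears in Axolotl's
    # README, but check the docs for your installed version:
    #   accelerate launch -m axolotl.cli.train lora-llama3.yml
    print(f"Wrote {cfg}")
```

Settings left out fall back to Axolotl's defaults. `type: alpaca` tells it how to turn each dataset row into a prompt.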

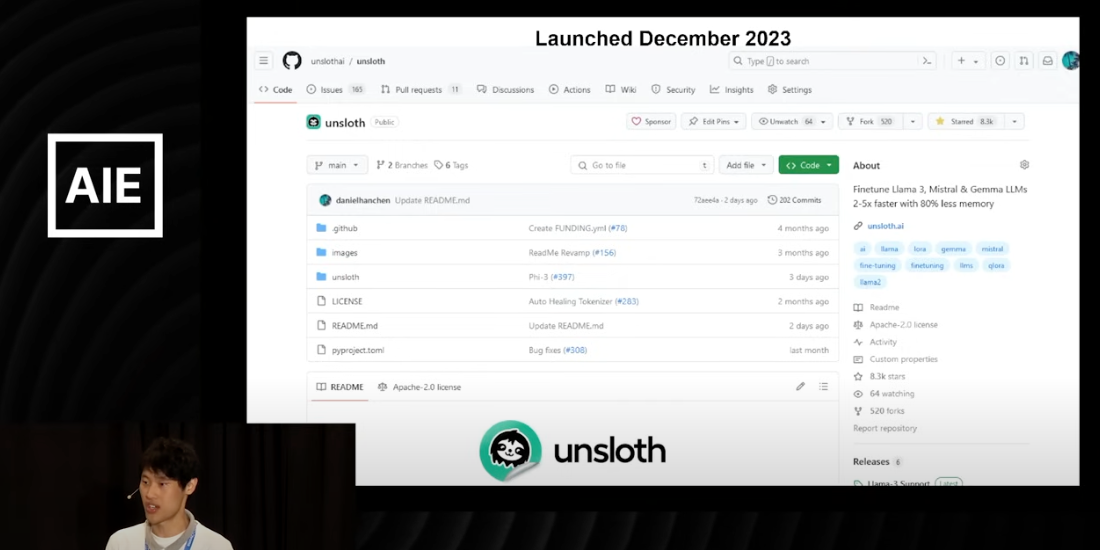

Unsloth

Unsloth, built by Daniel Han Chen, a former Nvidia engineer, is designed to dramatically improve the speed and efficiency of LLM fine-tuning. According to the Unsloth team, it fine-tunes LLaMA 3.1, Mistral, Phi, and Gemma models 2-5x faster with 80% less memory than FA2 (Flash Attention 2).

Unsloth achieves these improvements without degrading accuracy, because its speedups don't rely on approximation or quantization. Instead, the Unsloth team gets its speed from custom GPU kernels written in Triton, OpenAI's high-level language for GPU programming.

The main goal of Unsloth is to make it possible for everyone to fine-tune their language models, even with very limited GPU resources. Consider using Unsloth if you only have access to smaller or older GPUs.

For example, if you are fine-tuning on the free tier of Google Colab, which gives you a single Tesla T4 GPU, Unsloth might be the right choice for you. (Note that Unsloth does not support multi-GPU training, so if you have access to multiple GPUs, use Axolotl instead.)

Torchtune

Torchtune is a PyTorch-native library for easily fine-tuning LLMs. It offers a lean, extensible, abstraction-free design that’s just pure PyTorch.

The library is designed with memory efficiency in mind, with recipes tested on consumer GPUs with 24 GB of VRAM. Torchtune integrates well with popular libraries across the PyTorch ecosystem. It also provides recipes for full fine-tuning and for parameter-efficient techniques such as LoRA and QLoRA.
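Here is a back-of-envelope estimate of why parameter-efficient methods matter on a 24 GB card. LoRA freezes a weight matrix of shape d_out × d_in and trains two low-rank factors instead, which adds r × (d_out + d_in) parameters. QLoRA goes further and stores the frozen base weights in 4-bit.

All of the following are assumptions chosen for illustration:

- the per-parameter byte costs, which assume a standard mixed-precision AdamW setup;
- the roughly LLaMA-3-8B-like layer shapes;
- the rounded 8-billion parameter total.

The estimate ignores activations, quantization constants, and framework overhead, so real usage will be higher. Memory-saving options such as 8-bit optimizers would also change the numbers.

```python
# Back-of-envelope fine-tuning memory estimate. All constants are assumptions.

# Assumed bytes per trainable parameter with mixed precision + AdamW:
# 2 (bf16 weights) + 2 (bf16 grads) + 4 (fp32 master copy) + 8 (two fp32 Adam moments).
TRAINABLE_BYTES = 16
# Assumed bytes per frozen base-model parameter: bf16 for LoRA, 4-bit for QLoRA.
FROZEN_BYTES = {"lora": 2.0, "qlora": 0.5}

# Assumed per-layer linear shapes (d_out, d_in), roughly LLaMA-3-8B-like.
LAYER_SHAPES = {
    "q_proj": (4096, 4096),
    "k_proj": (1024, 4096),
    "v_proj": (1024, 4096),
    "o_proj": (4096, 4096),
    "gate_proj": (14336, 4096),
    "up_proj": (14336, 4096),
    "down_proj": (4096, 14336),
}
NUM_LAYERS = 32
TOTAL_PARAMS = 8_000_000_000  # rounded


def lora_params(d_out: int, d_in: int, r: int) -> int:
    """Trainable parameters LoRA adds to one weight: B is d_out x r, A is r x d_in."""
    return r * (d_out + d_in)


def adapter_params(r: int) -> int:
    """LoRA parameters when every listed projection in every layer gets an adapter."""
    per_layer = sum(lora_params(d_out, d_in, r) for d_out, d_in in LAYER_SHAPES.values())
    return NUM_LAYERS * per_layer


def estimate_gb(method: str, r: int = 16) -> float:
    """Weights + gradients + optimizer state in GB (1e9 bytes); activations ignored."""
    if method == "full":
        # Every parameter is trained.
        return TOTAL_PARAMS * TRAINABLE_BYTES / 1e9
    # Base weights are frozen; only the adapters carry grads and optimizer state.
    return (TOTAL_PARAMS * FROZEN_BYTES[method] + adapter_params(r) * TRAINABLE_BYTES) / 1e9


if __name__ == "__main__":
    print(lora_params(4096, 4096, 16))  # 131072, vs. 16777216 weights in the full matrix
    print(adapter_params(16))           # 41943040
    for method in ("full", "lora", "qlora"):
        # Roughly 128.0, 16.7, and 4.7 GB respectively.
        print(f"{method:>5}: {estimate_gb(method):6.1f} GB")
```

Under these assumptions, full fine-tuning needs several times more than 24 GB for weights and optimizer state alone. LoRA and QLoRA leave some headroom for activations, and the rank-16 adapters are only about 0.5% of the model's parameters.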

Torchtune is an excellent choice if you prefer working directly with PyTorch without additional abstractions, need flexibility and extensibility in your fine-tuning pipeline, or want to leverage a wide range of integrations with popular AI tools and platforms.

Conclusion

The choice between these tools ultimately depends on your specific requirements, hardware constraints, and level of expertise.

In the vast majority of cases, and especially if you are a beginner, we recommend using Axolotl.

To fine-tune with Axolotl using Modal, check out our fine-tuning starter code.