Product Updates: Volumes v2, JS and Go SDKs, and more

🗃️ Volumes v2 now in Beta

Volumes v2 is now in open beta and available for all Modal apps. We’ve updated the filesystem to support higher throughput, improved random-access performance, and true high-concurrency writes from hundreds of containers at once.

There’s also no longer a limit on the number of files you can store, making Volumes ideal for managing large datasets, model checkpoints, and training artifacts.

Check out the Volumes v2 docs for more.

💡 Modal SDKs for JavaScript and Go are now in Beta

The Modal SDKs for JavaScript/TypeScript and Go are now in beta with v0.5. This release brings a unified Client object for interacting with Modal resources, support for Sandboxes, Functions, Images, and Volumes, and extended docs and examples.

Read the docs for more.

👩‍💻 Client Updates

Run pip install --upgrade modal to get the latest client updates. Here are some highlights from the changelog:

- Introduced App tags, which attach key-value metadata to your Apps for better organization and cost tracking

- Added Sandbox Connect Tokens for making secure HTTP and WebSocket connections to Sandboxes using authenticated requests

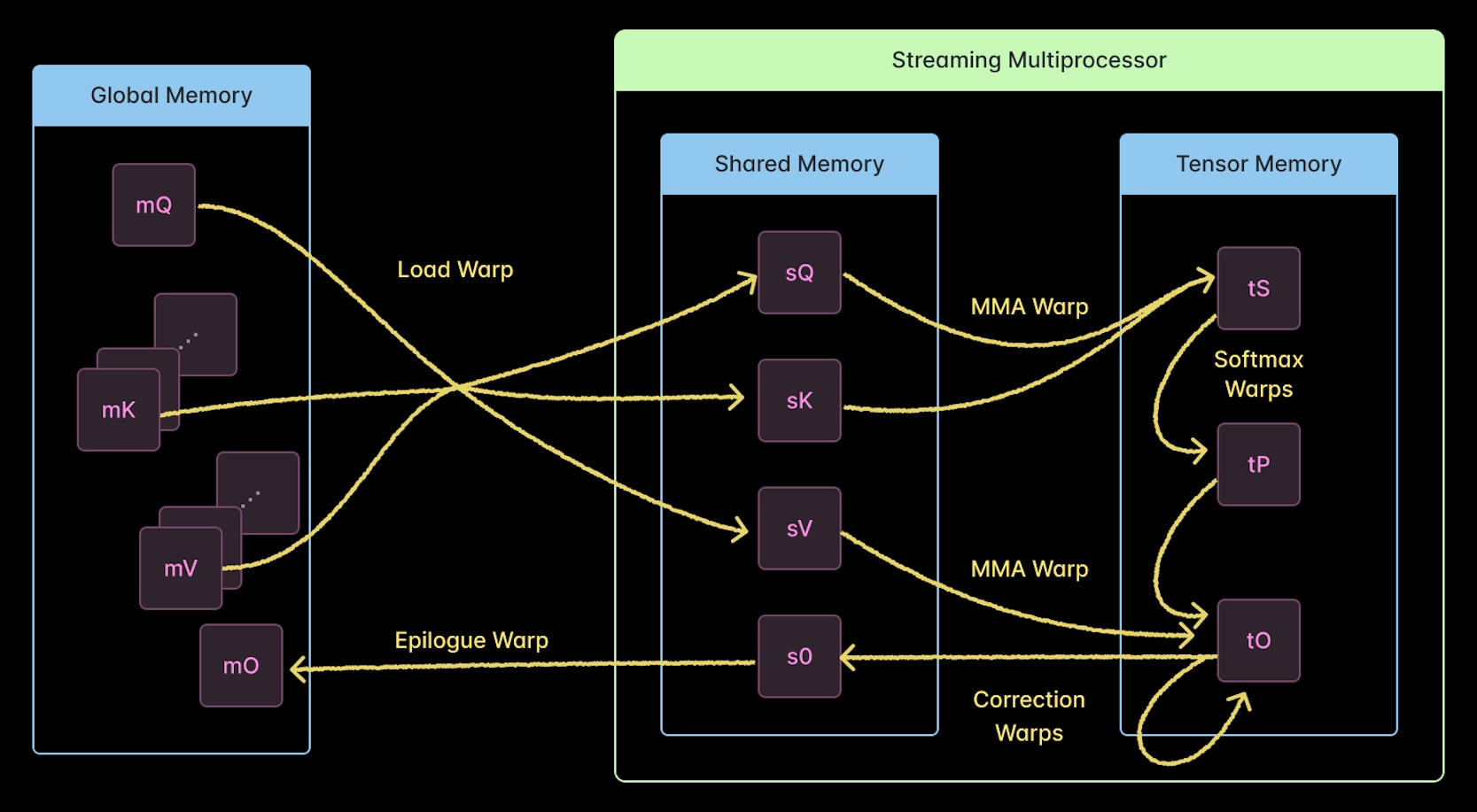

⚡️ Reverse engineering Flash Attention 4

Nvidia’s new Flash Attention 4 kernel delivers up to 20% faster Transformer attention on Blackwell GPUs. Since there’s no paper yet, we dug into the source to explain how it works, from async pipelines to clever math tricks for exponentials and softmax.

Read the full breakdown here.
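The breakdown covers FA4's specifics. As a rough illustration of the two general ideas mentioned above, the Python sketch below shows (1) the online-softmax recurrence that FlashAttention-style kernels use to process keys block by block without materializing the full softmax, and (2) one standard way to compute an exponential in software: split 2^x into an integer exponent shift plus a low-degree polynomial on the fractional part. This is not FA4's code. The function names, the block size, and the polynomial coefficients (Taylor terms with the cubic term adjusted so that p(1) = 2) are our own illustrative assumptions, and a real kernel applies these ideas to matrix tiles on the GPU, not to Python lists.

```python
import math

# Illustrative cubic for 2**f on [0, 1]: Taylor coefficients of e**(f*ln2)
# through f**2, with the cubic term chosen so that p(1) == 2 exactly.
# These are assumed, illustrative constants, not FA4's.
LN2 = math.log(2.0)
C1 = LN2
C2 = LN2 * LN2 / 2.0
C3 = 1.0 - C1 - C2
LOG2_E = 1.0 / LN2


def exp2_poly(x: float) -> float:
    """Approximate 2**x with range reduction plus a cubic polynomial."""
    if x == -math.inf:
        return 0.0
    n = math.floor(x)  # integer part: applied as an exact exponent shift
    f = x - n          # fractional part in [0, 1]
    p = 1.0 + f * (C1 + f * (C2 + f * C3))  # Horner form: three multiply-adds
    return math.ldexp(p, n)  # p * 2**n


def exp_via_exp2(x: float) -> float:
    """Compute e**x as 2**(x * log2(e)) using the polynomial above."""
    return exp2_poly(x * LOG2_E)


def online_softmax_weighted_sum(scores, values, block_size=2, exp_fn=math.exp):
    """Single pass over blocks of keys, keeping a running max,
    a running denominator, and a running weighted sum of values."""
    running_max = -math.inf
    denom = 0.0
    numer = 0.0
    for start in range(0, len(scores), block_size):
        block_s = scores[start:start + block_size]
        block_v = values[start:start + block_size]
        new_max = max(running_max, max(block_s))
        # Rescale everything accumulated under the old max.
        # On the first block this factor is exp(-inf) == 0.
        scale = exp_fn(running_max - new_max)
        denom *= scale
        numer *= scale
        for s, v in zip(block_s, block_v):
            w = exp_fn(s - new_max)  # exponent <= 0, so this cannot overflow
            denom += w
            numer += w * v
        running_max = new_max
    return numer / denom


def reference_softmax_weighted_sum(scores, values):
    """Two-pass baseline: materialize all weights, then normalize."""
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


if __name__ == "__main__":
    scores = [2.0, -1.0, 0.5, 3.0, 1.5]
    values = [1.0, 2.0, 3.0, 4.0, 5.0]
    print("reference:       ", reference_softmax_weighted_sum(scores, values))
    print("online (exact):  ", online_softmax_weighted_sum(scores, values))
    print("online (poly exp):", online_softmax_weighted_sum(scores, values, exp_fn=exp_via_exp2))
```

The online version matches the two-pass result for any block size because each rescale multiplies the numerator and denominator by the same factor. Passing `exp_fn=exp_via_exp2` trades a small accuracy loss (with these coefficients, under about 0.1% relative error per exponential) for an exponential built only from multiplies, adds, and an exponent shift, which is the kind of trade-off a software exponential makes.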

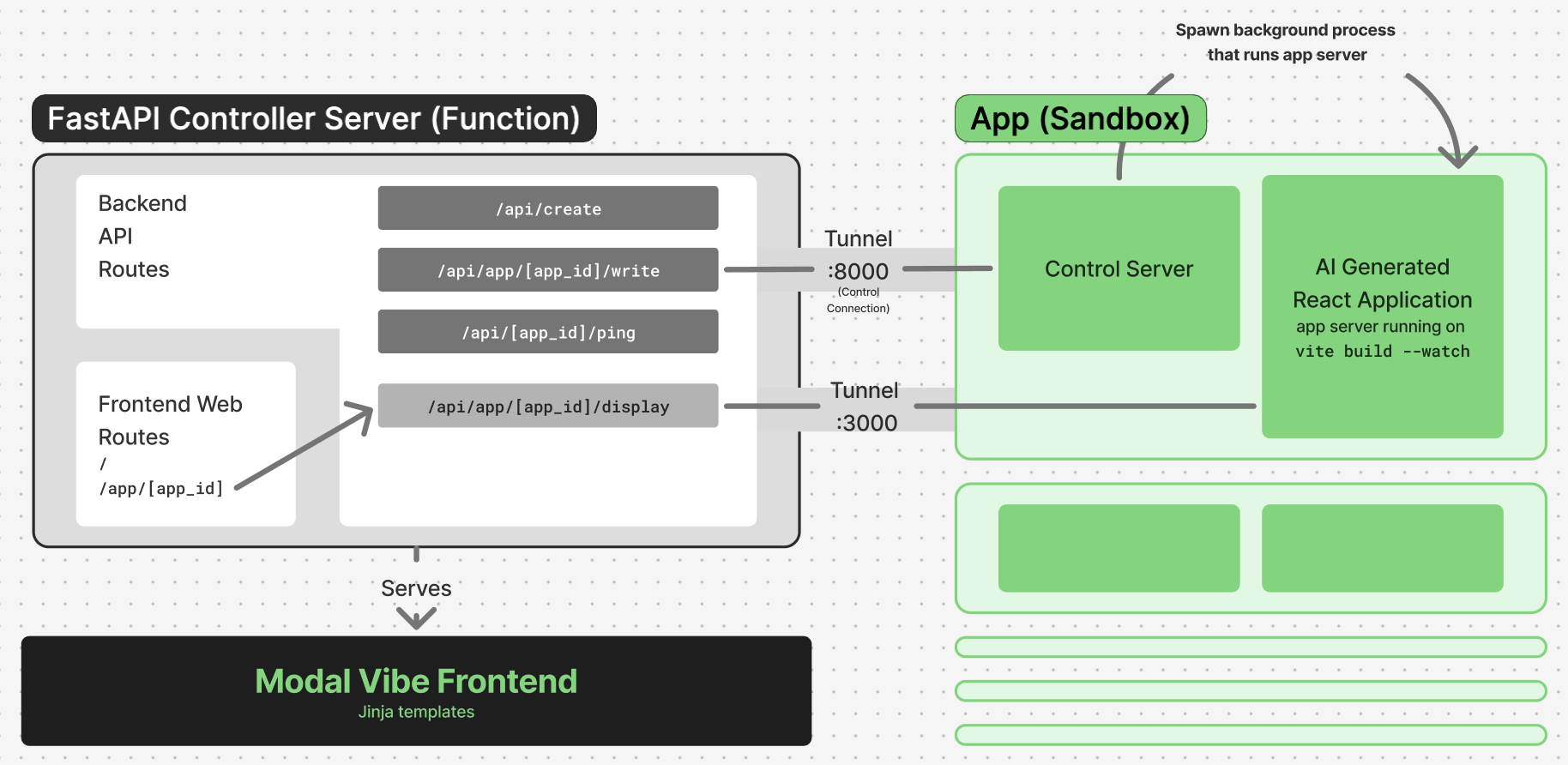

🪩 Modal Vibe: Build an AI coding platform that scales

Modal Vibe is a new, open-source demo that shows how to build an AI coding platform that can scale. It lets users prompt an LLM to generate web apps that each run inside their own Modal Sandbox and connect to a React UI through Modal Tunnels. The system can scale from zero to thousands of running apps in just minutes.

Read the blog post for performance results and architecture details, or explore the repo to learn more.

🌎 How Meta, Cognition, and Restate are building with Modal Sandboxes

Across research and production, teams are using Modal Sandboxes to securely run code at scale, from world-model research to fast, agentic coding systems.

- The team at Meta FAIR used Sandboxes as part of their reinforcement learning setup for the recently released Code World Model (CWM), a 32B-parameter open-weights LLM for code generation research. Read the paper →

- Cognition launched its Fast Context subagent this month with a playground built on Modal Sandboxes. The playground enables instant, containerized comparisons between SWE-grep, Claude Code, and others, and is designed to feel local in the browser. Read more →

- In their latest guide, the team at Restate shows how to build a durable, serverless coding agent using Sandboxes for execution, Restate for orchestration, and GPT-5 as the LLM. Read the guide →

Upcoming Events

Modal is on the move! Meet the team, grab some swag, and talk shop about AI infra, research, and everything in between.

- 💥 AI Engineer Code Summit (Nov 19-21, NYC)

- ☁️ AWS re:Invent (Dec 1-5, Las Vegas)

- 🧠 NeurIPS 2025 (Dec 2-7, San Diego)

👉 Check out our event calendar to RSVP or book a 1:1 with the Modal team at any of these events.