LiveKit vs Vapi: which voice AI framework is best in 2025?

LiveKit Agents and Vapi are both voice AI agent frameworks, but they cater to different audiences and take distinct approaches to product design. LiveKit Agents is an open-source framework for building entirely custom voice (and video) agent applications; it’s designed for developers building any kind of voice agent (or WebRTC) platform, including niche cases with unusual architectures. Vapi, meanwhile, is more of a “turnkey,” closed-source solution focused on common voice agent patterns, while still giving developers some room to customize.

Recently, we compared LiveKit Agents with its biggest competitor, Pipecat (blog post coming soon). LiveKit and Pipecat are very similar; they are both open-source, offer key primitives for voice AI, and are focused on orchestrating common WebRTC strategies. LiveKit and Vapi, meanwhile, are in the same broad category, but deviate considerably in API and SDK design.

Perhaps it’s a childish analogy, but LiveKit is the LEGOs of voice AI. There might be an instruction manual on what to do with it, but it’s otherwise an unopinionated framework that expects developers to be creative. Vapi, meanwhile, is the Playmobil alternative: it’s flexible and allows for creative strategies, but generally expects a limited set of configurations.

Today, we’ll discuss how LiveKit and Vapi compare at a feature-level depth, detailing the products’ focuses, design decisions, constraints, pricing, and ideal customer profiles.

The tl;dr—what are the high-level differences between LiveKit and Vapi?

It’s easy for a comparison between two developer frameworks to get caught in the weeds, especially with a topic as complex as WebRTC and voice AI agents. So, before diving in further, let’s summarize some of the common themes that LiveKit and Vapi differ on:

- LiveKit is more developer-oriented than Vapi. It is an open-source framework made of multiple discrete parts, is fairly unopinionated, and has a large community fostered by its open-source license.

- Vapi is significantly more telephony-focused than LiveKit. Telephony is one of the hardest components of voice AI because traditional phone networks use older standards that were never designed for WebRTC or for interoperability. Accordingly, Vapi provides multiple helpers that simplify phone calls. LiveKit supports telephony too, with broad coverage, but doesn’t abstract telephony concepts as much as Vapi does.

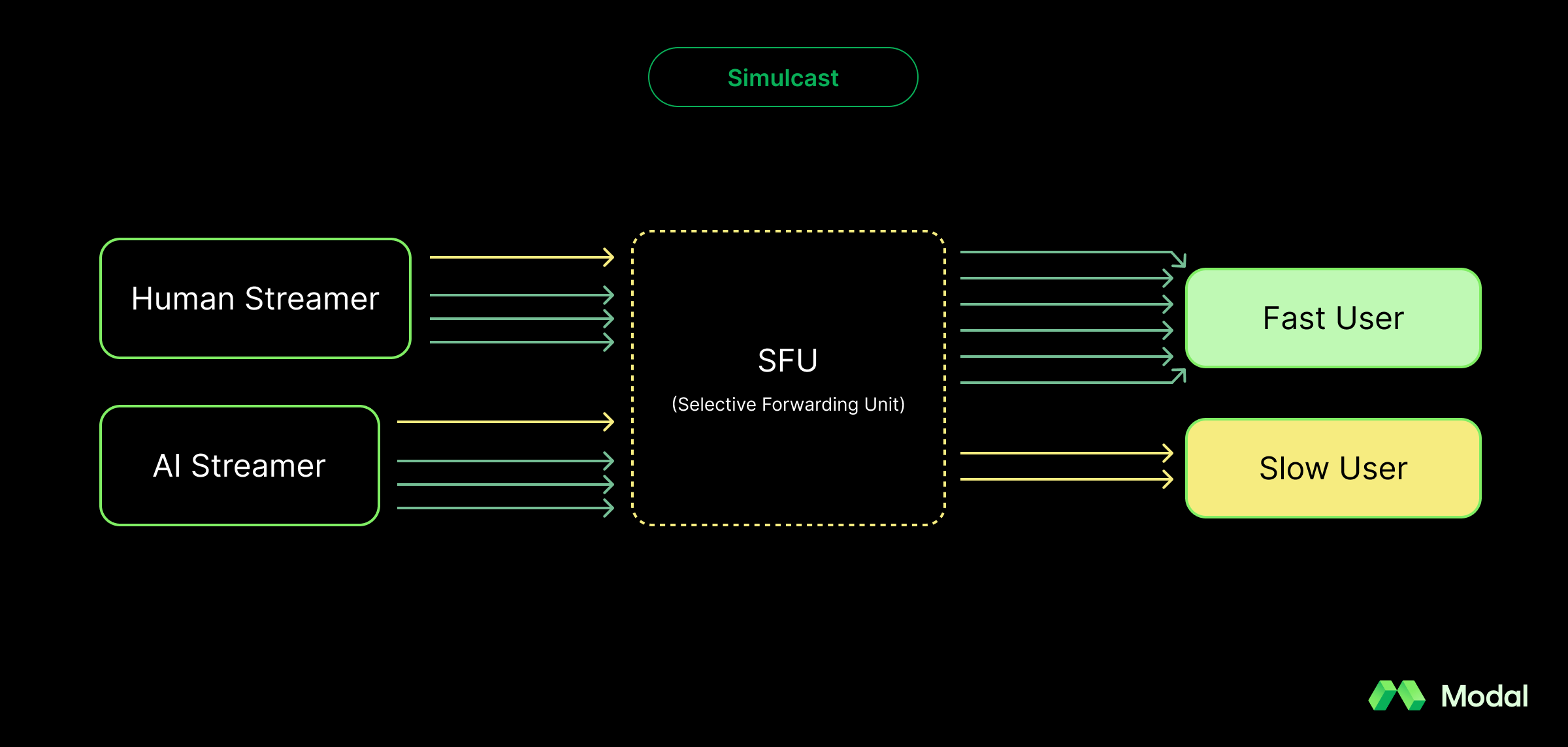

- Both LiveKit and Vapi run proprietary transport networks, but LiveKit’s is built from Selective Forwarding Units (SFUs) on top of WebRTC. An SFU receives each participant’s stream once and forwards it to every subscriber, which scales well to sessions with many participants, something traditional WebSockets would struggle with. Vapi, optimizing for simpler voice AI use cases, uses WebSockets for bidirectional communication.

- Only LiveKit supports video. LiveKit’s networking layer is what makes video possible; Vapi transmits audio only, using audio-only codecs. That said, those audio codecs are consistent with the ones telephony needs.

As we run through the feature-by-feature differences between LiveKit and Vapi, keep these high-level distinctions in mind.

What are voice AI agents?

Today, many applications rely on voice AI agents: autonomous systems that listen to speech, reason about a response, and then reply out loud. Voice AI agents have broad uses, including customer support (e.g., airline hotlines), virtual personal assistants (e.g., Amazon’s Alexa), sales training applications (e.g., SecondNature), and virtual character applications (e.g., Fortnite’s Darth Vader experiment).

Regardless of the use case, voice AI agents are complex. They require multiple discrete AI models, including speech-to-text (STT), voice activity detection (VAD), language processing via large language models (LLMs), and text-to-speech (TTS). These models must be orchestrated in real time across different transports to produce a fluid conversation. Both LiveKit and Vapi help developers build these pipelines by integrating with state-of-the-art (SoTA) models in each category.
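To make the orchestration problem concrete, here is a minimal, framework-agnostic sketch of how the four model types chain together for one conversational turn, assuming each model is a plain synchronous function. The `VoicePipeline` class and the stub models are hypothetical, not LiveKit’s or Vapi’s API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

Frame = bytes  # one short chunk of raw audio


@dataclass
class VoicePipeline:
    # Hypothetical stand-ins for the four model types a voice agent chains together.
    vad: Callable[[Frame], bool]        # voice activity detection: is this frame speech?
    stt: Callable[[List[Frame]], str]   # speech-to-text: buffered frames -> transcript
    llm: Callable[[str], str]           # language model: transcript -> reply text
    tts: Callable[[str], bytes]         # text-to-speech: reply text -> audio
    _speech: List[Frame] = field(default_factory=list, init=False, repr=False)

    def push(self, frame: Frame) -> Optional[bytes]:
        """Feed one frame; return reply audio once the user's turn ends, else None."""
        if self.vad(frame):
            self._speech.append(frame)  # user is talking: keep buffering
            return None
        if not self._speech:
            return None  # silence with nothing buffered: keep listening
        # Naive endpointing: the first silent frame after speech ends the turn.
        transcript = self.stt(self._speech)
        self._speech = []
        return self.tts(self.llm(transcript))


# Usage with toy models: any non-zero byte counts as speech.
pipeline = VoicePipeline(
    vad=lambda frame: any(frame),
    stt=lambda frames: b" ".join(frames).decode(),
    llm=lambda text: "You said: " + text,
    tts=lambda text: text.encode(),
)
replies = [pipeline.push(f) for f in (b"hello", b"world", b"\x00\x00")]
# replies == [None, None, b"You said: hello world"]
```

Real frameworks typically stream audio asynchronously, wait for a run of silent frames rather than one, and start synthesizing speech before the LLM has finished; the sketch only shows the order in which the models are chained.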

Who was Vapi designed for?

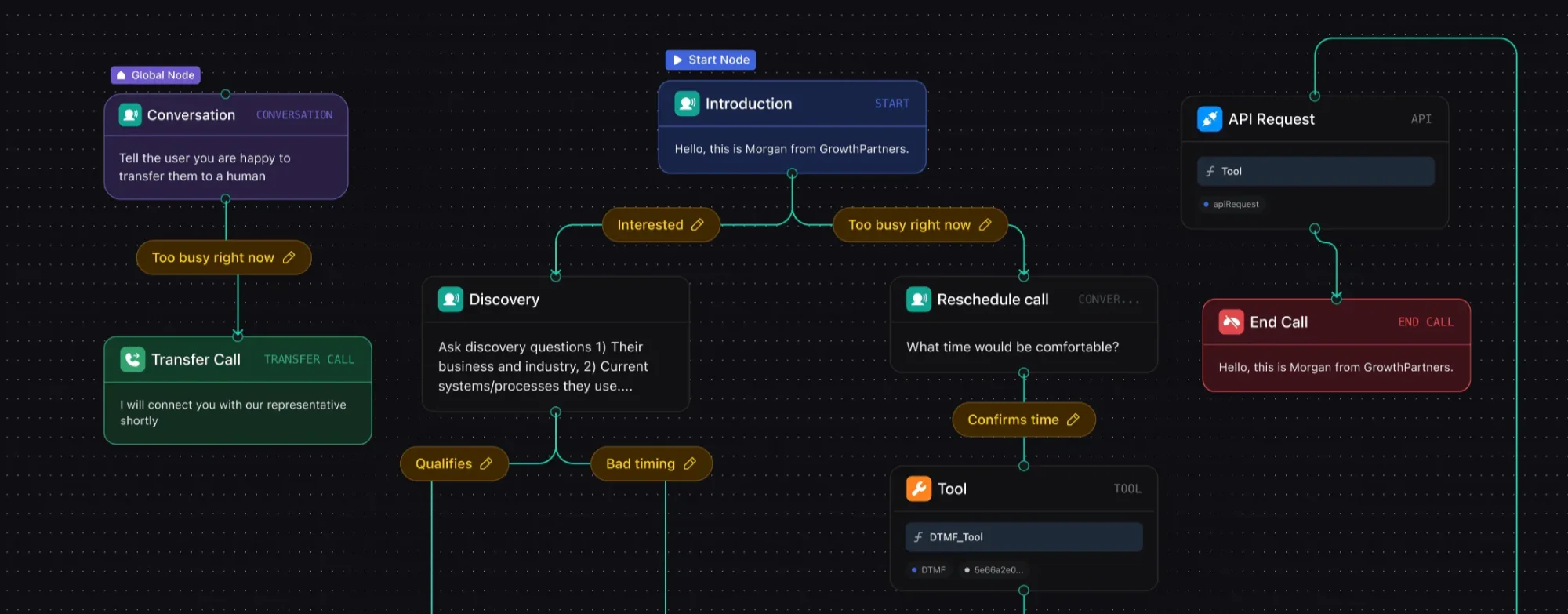

Generally speaking, Vapi is a developer-oriented platform. However, Vapi operates at a higher level of abstraction and was designed specifically for developers who want an opinionated, out-of-the-box framework for common voice AI patterns. For example, a typical Vapi user might be building an appointment scheduling bot, a use case Vapi covers in a step-by-step tutorial. Vapi also ships with a workflow visualization tool, making it easy for developers to start from a template and extend it for their needs.

Who was LiveKit designed for?

LiveKit is an open-source framework, which makes it even more developer-friendly than Vapi. LiveKit’s framework can be modified, extended, self-hosted, or even repackaged into a broader product. Several sub-products together form the LiveKit ecosystem: LiveKit Server (the media server that relays packets to the voice AI agent), LiveKit Agents (the framework for building the agent that handles the audio conversation), and LiveKit SIP (for bridging to traditional phone networks). This modular, extensible design makes LiveKit ideal for complex voice AI products that need to be designed for scale.

LiveKit’s primary business model is its managed LiveKit Cloud product (hosted LiveKit Server), which runs on LiveKit’s proprietary global transport network.

Are LiveKit and Vapi open-source?

LiveKit is an open-source product. Its framework, including LiveKit Agents and LiveKit Server, is open source, while the global WebRTC SFU mesh behind LiveKit Cloud is proprietary.

Vapi, meanwhile, is a closed-source product: developers invoke Vapi’s core services through its API. Vapi does open-source its example repos, but that doesn’t make it an OSS company, since its core product remains proprietary.

6 core advantages of LiveKit

LiveKit has many advantages. Most come from LiveKit giving developers full control at various turns (pun intended, but if you didn’t get it, don’t worry) of the WebRTC experience. We focus on the six most interesting ones, pricing among them.

1. LiveKit treats rooms as first-class concepts

LiveKit has first-class primitives for rooms, participants, and audio tracks, with functions to list, mute, and remove participants as well as to set permissions and issue authorization tokens. This is partly because LiveKit is an open-source product: by design, everything is exposed for the developer to configure.

This extensibility makes LiveKit an ideal framework for complex voice AI applications, such as Zoom-like video conferencing or e-conference apps. LiveKit is also an excellent framework for applications without voice AI, competing with Daily.co and Twilio.com.
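The room, participant, and token primitives described above can be modeled in a few dozen lines. The sketch below is a toy, in-memory illustration: `Room`, `mint_token`, and `verify_token` are hypothetical names, not LiveKit’s server SDK, and LiveKit’s real access tokens are JWTs signed with an API key and secret rather than the bare HMAC used here.

```python
import base64
import hashlib
import hmac
import json
from dataclasses import dataclass, field
from typing import Dict, List

API_SECRET = b"hypothetical-secret"  # stands in for a server-side API secret


def mint_token(identity: str, room: str, can_publish: bool) -> str:
    """Issue a signed grant naming the room the holder may join and whether they may publish."""
    payload = json.dumps(
        {"identity": identity, "room": room, "can_publish": can_publish}, sort_keys=True
    ).encode()
    signature = hmac.new(API_SECRET, payload, hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(payload).decode() + "." + signature


def verify_token(token: str) -> dict:
    """Reject tokens whose signature does not match their payload."""
    encoded, signature = token.rsplit(".", 1)
    payload = base64.urlsafe_b64decode(encoded)
    expected = hmac.new(API_SECRET, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        raise PermissionError("invalid token signature")
    return json.loads(payload)


@dataclass
class Participant:
    identity: str
    can_publish: bool
    muted: bool = False


@dataclass
class Room:
    name: str
    participants: Dict[str, Participant] = field(default_factory=dict)

    def join(self, token: str) -> Participant:
        grant = verify_token(token)
        if grant["room"] != self.name:
            raise PermissionError("token was issued for a different room")
        participant = Participant(grant["identity"], grant["can_publish"])
        self.participants[participant.identity] = participant
        return participant

    def list_participants(self) -> List[str]:
        return sorted(self.participants)

    def mute(self, identity: str) -> None:
        self.participants[identity].muted = True

    def remove(self, identity: str) -> None:
        del self.participants[identity]


# Usage: a caller and an agent share a room; the caller is then muted and removed.
room = Room("support-call")
room.join(mint_token("caller", "support-call", can_publish=True))
room.join(mint_token("agent", "support-call", can_publish=True))
room.mute("caller")
room.remove("caller")
print(room.list_participants())  # ['agent']
```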

2. LiveKit is ideal for streaming use cases

While Vapi focuses on two-way communication (as its choice of WebSockets suggests), LiveKit is designed for all types of communication, including cases where one or a few users stream to potentially thousands of others. LiveKit supports Simulcast, where a publisher sends several quality layers and subscribers with poor bandwidth receive a lower-quality one, and Dynacast, where layers no subscriber is using are paused to save CPU. LiveKit tracks can also be end-to-end encrypted, which is critical for organizations streaming proprietary content (e.g., a shareholder meeting with voice AI assistants).

That said, Vapi’s WebSockets can still carry real-time audio, but LiveKit’s choice of WebRTC and SFUs holds up better at scale. WebRTC media usually travels over UDP, so a lost packet can simply be skipped instead of stalling everything behind it, as happens on a TCP-based WebSocket, and traffic between any two users has redundant paths to minimize loss.
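To illustrate the Simulcast and Dynacast behavior described above, the sketch below gives each subscriber the best layer its bandwidth allows and reports which layers nobody is receiving, which are the layers Dynacast would pause. The three-layer ladder and its bitrates are hypothetical values chosen for illustration; this is a toy model of the decision, not LiveKit’s implementation.

```python
from typing import Dict, Set, Tuple

# Hypothetical simulcast ladder published by one video track: layer -> bitrate (kbps).
LAYERS: Dict[str, int] = {"low": 150, "medium": 500, "high": 1700}


def pick_layer(bandwidth_kbps: int) -> str:
    """Simulcast: the best layer that fits the subscriber's bandwidth, else the lowest layer."""
    fitting = [name for name, rate in LAYERS.items() if rate <= bandwidth_kbps]
    if not fitting:
        return min(LAYERS, key=LAYERS.__getitem__)
    return max(fitting, key=LAYERS.__getitem__)


def plan(subscribers: Dict[str, int]) -> Tuple[Dict[str, str], Set[str]]:
    """Map each subscriber to a layer and return the layers no subscriber needs."""
    assignment = {name: pick_layer(bw) for name, bw in subscribers.items()}
    unused = set(LAYERS) - set(assignment.values())  # Dynacast-style: pause these at the publisher
    return assignment, unused


assignment, unused = plan({"office": 5000, "phone": 600, "train": 100})
# assignment == {"office": "high", "phone": "medium", "train": "low"}, unused == set()
assignment, unused = plan({"a": 600, "b": 700})
# unused == {"low", "high"}: only the medium layer needs to be encoded and sent
```

In this model the selection happens at the server, so the publisher uploads at most one encoding per layer no matter how many subscribers there are.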

3. LiveKit has better turn-taking

Turn-taking is a difficult sub-problem for voice AI agents. It involves the agent deciding whether it’s its turn to speak. Typically, this decision is delegated to an independent voice activity detection (VAD) model.

Vapi has some turn-taking functionality, which it brands as smart endpointing, but LiveKit exposes an entire framework for configuring turn-taking to a developer’s needs. With LiveKit, developers can configure their STT, LLM, and TTS models and their VAD, and write custom logic for handoffs. For instance, it would be easy to animate a visual cue indicating that the agent is starting to think it’s its turn. Turn-taking is also easy to configure with LiveKit’s allow_interruptions option and interrupt() method.
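Here is a minimal sketch of VAD-driven turn-taking, assuming a VAD that labels each short audio frame as speech or silence. The `TurnDetector` class and its event names are hypothetical; the `allow_interruptions` flag borrows the name of the LiveKit option mentioned above but is not LiveKit’s implementation. The detector waits for several consecutive silent frames before ending the user’s turn, so a brief pause does not trigger a reply, and it stops the agent when the user talks over it only if interruptions are allowed.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class TurnDetector:
    min_silence_frames: int = 3       # hypothetical endpointing threshold, counted in VAD frames
    allow_interruptions: bool = True  # may the user cut the agent off mid-reply?
    agent_speaking: bool = False
    _user_active: bool = field(default=False, init=False)
    _silent_frames: int = field(default=0, init=False)

    def on_frame(self, is_speech: bool) -> List[str]:
        """Consume one VAD decision and return any turn-taking events it triggers."""
        events: List[str] = []
        if is_speech:
            if self.agent_speaking:
                if not self.allow_interruptions:
                    return events  # the agent holds the floor; ignore overlapping speech
                self.agent_speaking = False
                events.append("interrupt_agent")
            self._silent_frames = 0
            if not self._user_active:
                self._user_active = True
                events.append("user_started")
        elif self._user_active:
            self._silent_frames += 1
            if self._silent_frames >= self.min_silence_frames:
                self._user_active = False
                self._silent_frames = 0
                events.append("end_of_turn")  # hand the transcript to the LLM here
        return events


detector = TurnDetector(min_silence_frames=2)
events = [e for speech in (True, True, False, True, False, False) for e in detector.on_frame(speech)]
# events == ["user_started", "end_of_turn"]: the single silent frame did not end the turn
```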

4. Telephony primitives

Vapi ships with more telephony abstractions, but LiveKit has lower-level telephony primitives, including SIP trunks (which connect LiveKit to phone carriers and business phone systems), agent dispatch (where an agent is explicitly dispatched to a phone call), and call transfers. Vapi offers these features too, but with less configurability.

5. LiveKit supports video!

While Vapi is exclusively an audio platform, supporting audio-only codecs like pcm_s16le, LiveKit also supports video, boasting technology that can rival Zoom. LiveKit makes this possible by supporting the H.264 and VP9 codecs, running an excellent SFU transport network, and offering pre-built, open-source video applications as LiveKit Meet.
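For reference, `pcm_s16le` is raw, uncompressed audio: each sample is a signed 16-bit integer stored little-endian. The sketch below encodes float samples into that format and computes the resulting bitrate; the helper names are hypothetical, and the 16 kHz and 8 kHz sample rates in the usage lines are example values, not figures from Vapi’s documentation.

```python
import struct
from typing import List


def to_pcm_s16le(samples: List[float]) -> bytes:
    """Encode float samples in [-1.0, 1.0] as signed 16-bit little-endian PCM."""
    ints = [max(-32768, min(32767, round(s * 32767))) for s in samples]
    return struct.pack(f"<{len(ints)}h", *ints)  # "<" = little-endian, "h" = int16


def pcm_bitrate_kbps(sample_rate_hz: int, channels: int = 1) -> float:
    """Uncompressed bitrate: samples per second x 16 bits x channels."""
    return sample_rate_hz * 16 * channels / 1000


print(to_pcm_s16le([0.0, 1.0, -1.0]).hex())  # 0000ff7f0180
print(pcm_bitrate_kbps(16_000))  # 256.0
print(pcm_bitrate_kbps(8_000))   # 128.0
```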

6. Flexible pricing

Unlike Vapi, which offers just two tiers (a simple plan and an enterprise plan), LiveKit offers multiple tiers, including a free tier, to match the needs of a project. LiveKit’s free tier still allows for a single voice AI agent and 1,000 free minutes, which is ample for testing. Because LiveKit Agents is open source, it can also be self-deployed. However, LiveKit Agents still need a LiveKit Server for WebRTC transport, and for most use cases it isn’t practical to self-host the server itself.

5 core advantages of Vapi

While LiveKit has a more extensible experience for developers, Vapi still features some considerable advantages for certain projects.

1. Easy call handling

Unlike LiveKit, which is built around room primitives, Vapi has pre-built functions for handling phone and web calls. These include call transfers, live call control (where content can be injected into an ongoing call dynamically), and voicemail detection across multiple providers, including a native one.

2. Built-in phone numbers

Unlike LiveKit, which requires users to bring numbers from an external provider like Twilio or Telnyx, Vapi can provision numbers directly and assign them to AI agents. This is particularly important for applications that need to create numbers programmatically. Vapi also integrates with Twilio and Telnyx for users who already have numbers provisioned on those platforms.

3. Fantastic tutorials

Vapi has amazing tutorials that document how to build end-to-end voice agent applications with Vapi, including support agents, voice widgets, documentation agents, and multilingual agents. Vapi is ideal for users who want to build a moderately simple application that matches one of these use cases.

4. Visual workflows GUI

Vapi has a Workflows Editor that visualizes the flow of a conversation as a series of programmatically instantiated nodes. This makes Vapi ideal for applications built around phone trees. This approach contrasts with LiveKit’s state-focused philosophy, which frames workflows around state rather than a directed graph.
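The difference between the two styles can be sketched in plain Python. Neither function below is Vapi’s or LiveKit’s API; the node names, intents, and slots are hypothetical. The graph version follows fixed, labeled edges the way a visual workflow editor draws them, while the state version decides what to ask next by inspecting shared state, so the caller can supply information in any order.

```python
from typing import Dict, List

# Graph style: each node has a prompt and labeled edges to the next node.
PHONE_TREE: Dict[str, dict] = {
    "greeting": {"say": "Billing or support?", "edges": {"billing": "billing", "support": "support"}},
    "billing": {"say": "Connecting you to billing.", "edges": {}},
    "support": {"say": "Please describe the issue.", "edges": {"done": "goodbye"}},
    "goodbye": {"say": "Thanks for calling.", "edges": {}},
}


def walk_graph(intents: List[str], start: str = "greeting") -> List[str]:
    """Follow one edge per recognized caller intent; unknown intents re-prompt in place."""
    node = start
    said = [PHONE_TREE[node]["say"]]
    for intent in intents:
        next_node = PHONE_TREE[node]["edges"].get(intent)
        if next_node is None:
            said.append("Sorry, I didn't catch that.")
            continue
        node = next_node
        said.append(PHONE_TREE[node]["say"])
    return said


# State style: one handler updates shared state and asks for whatever is still missing.
REQUIRED_SLOTS = (("name", "What's your name?"), ("date", "What day works for you?"))


def handle_turn(state: dict, new_slots: dict) -> str:
    state.update(new_slots)
    for slot, question in REQUIRED_SLOTS:
        if slot not in state:
            return question
    return f"Booked {state['name']} on {state['date']}."


print(walk_graph(["support", "done"]))
# ['Billing or support?', 'Please describe the issue.', 'Thanks for calling.']
booking: dict = {}
print(handle_turn(booking, {"date": "Friday"}))  # What's your name?
print(handle_turn(booking, {"name": "Ada"}))     # Booked Ada on Friday.
```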

5. Built-in call analysis

While LiveKit has excellent telemetry for collecting raw data, Vapi has built-in call notetakers that summarize the contents of a call for users, without the developer having to call an external LLM.

A closing thought: deployment

Because Vapi is closed source and proprietary, the only way to get started is by choosing the right Vapi plan and signing up for their product.

Because LiveKit is open-source, you can deploy LiveKit Agents anywhere. A developer-friendly option is Modal (we’re biased, but we’re about to tell you why).

Modal is an AI infrastructure platform for running and scaling compute-intensive workloads. With our Python SDK and extremely fast cold starts, you can deploy your AI application to cloud GPUs in seconds. Modal manages the orchestration of compute and autoscales based on request volume. In short, Modal helps developers ship responsive AI applications that have spiky workload profiles—such as voice (and video) AI agents! Interested?

Learn how to deploy LiveKit Agents in minutes with Modal.