How to deploy a Gradio app

What Is Gradio?

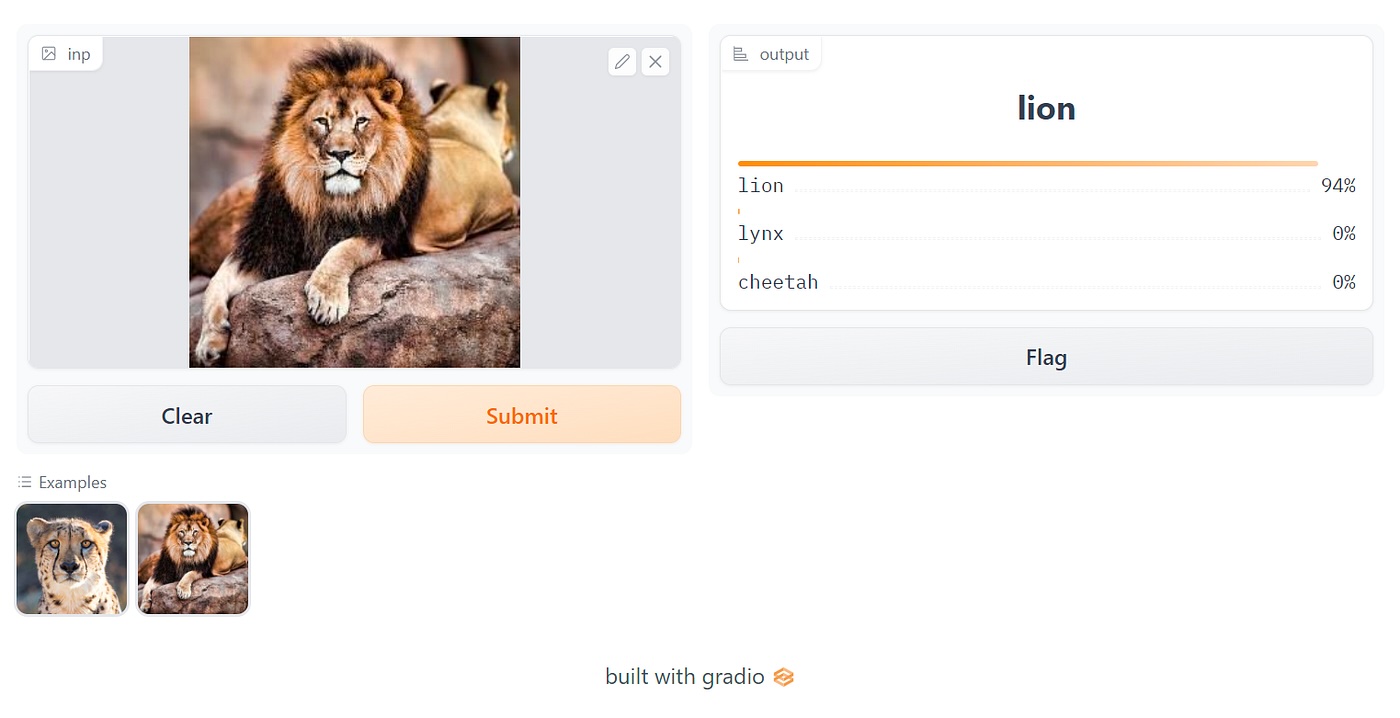

Gradio is an open-source Python library that allows developers to create intuitive web interfaces for their ML models with just a few lines of Python code. Whether you’re working with text, images, audio, or video, Gradio simplifies the process of making your models interactive and accessible.

Key Benefits of Gradio:

- Rapid prototyping of ML model interfaces

- Support for various input and output types

- Easy integration with popular ML frameworks

- Customizable UI components

Why Deploy Gradio in the Cloud?

While Gradio is excellent for local development, sharing your demo with someone else usually means deploying it in the cloud. Enter Modal!

Introducing Modal: Simplifying Cloud Deployment for Gradio

Modal provides a serverless platform that makes deploying Gradio interfaces in the cloud a breeze. With Modal, you can have your Gradio app up and running in minutes, without the hassle of managing infrastructure. Moreover, you can fine-tune your ML models and store their weights on Modal, making it a one-stop solution for all your ML deployment needs.

Step-by-Step Guide to Deploying Gradio on Modal

Let’s walk through the process of deploying a Gradio interface on Modal.

Step 1: Create Your Gradio Application

First, create a file named gradio_app.py with the following code:
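A minimal sketch of what such a file might look like, assuming Gradio's `Interface` API and Modal's `asgi_app` decorator. The app name, the packages in the image, and the concurrency and queue limits are illustrative assumptions, not values taken from this guide.

```python
import modal

# App name and image contents are illustrative assumptions.
app = modal.App("gradio-app")

web_image = modal.Image.debian_slim(python_version="3.11").pip_install(
    "fastapi[standard]",
    "gradio",
)


@app.function(
    image=web_image,
    # Gradio expects a client's requests to keep reaching the same server
    # process, so this sketch routes all traffic to a single container.
    max_containers=1,
)
@modal.concurrent(max_inputs=100)  # let that one container serve many requests at once
@modal.asgi_app()
def ui():
    """Build the Gradio interface and return it mounted on a FastAPI app."""
    # Imported inside the function: these packages only need to exist
    # in the container image, not on the machine that runs the deploy.
    import gradio as gr
    from fastapi import FastAPI

    def greet(name, intensity):
        # Greet by name; intensity sets the number of exclamation marks.
        return "Hello, " + name + "!" * int(intensity)

    demo = gr.Interface(
        fn=greet,
        inputs=["text", "slider"],
        outputs=["text"],
    )
    demo.queue(max_size=5)  # cap how many requests may wait in the queue

    return gr.mount_gradio_app(app=FastAPI(), blocks=demo, path="/")
```

Names such as `max_containers` and `@modal.concurrent` follow recent Modal releases. Older releases spelled these options differently, so check them against the installed version, and consider pinning package versions in the image for reproducible builds.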

This script creates a simple Gradio interface with a greeting function that takes a name and intensity as inputs.
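Because the greeting logic is plain Python, it can be checked locally before anything is deployed. The sketch below assumes the function is called `greet` and that intensity controls the number of exclamation marks. The text above names only the two inputs, so the exact behavior is an assumption.

```python
def greet(name: str, intensity: float) -> str:
    """Return a greeting whose exclamation marks scale with intensity."""
    # A slider may report a float, so coerce to int before repeating.
    return "Hello, " + name + "!" * int(intensity)


print(greet("Ada", 3))  # Hello, Ada!!!
```

Keeping this function free of Gradio and Modal imports means it can be unit-tested without either library installed.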

Step 2: Deploy Your Gradio App on Modal

To deploy your Gradio app on Modal, simply run:
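Assuming the Modal client is already installed (`pip install modal`) and authenticated (`modal setup`), both of which are prerequisites not covered in the steps above, the deploy command would look like this:

```shell
# Deploy the app defined in gradio_app.py as a persistent deployment
modal deploy gradio_app.py
```

While iterating, `modal serve gradio_app.py` runs a temporary version of the app that reloads when the file changes.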

Modal will build and deploy your app, then print a URL where your Gradio interface is live and accessible to anyone.

Why Choose Modal for Your Gradio Deployment?

Modal offers several advantages for deploying Gradio interfaces:

- Serverless Architecture: No need to manage servers or worry about scaling.

- Rapid Deployment: Get your demo online in minutes.

- Cost-Effective: Pay only for usage. If no one uses your app, you don’t pay.

- Easy Updates: Modify your Gradio interface and redeploy with a single command.

- Concurrent Request Handling: The deployment script is configured to handle multiple requests efficiently.

Conclusion: Elevate Your ML Demos with Gradio and Modal

By combining Gradio’s intuitive interface creation capabilities with Modal’s efficient cloud deployment solution, you can take your machine learning demonstrations to the next level.

Start deploying your Gradio interfaces with Modal today, and experience the future of ML model demonstration and collaboration!

Other Resources

For a more detailed example of how to deploy a Gradio app on Modal that serves as a demo frontend for a finetuned ML model, check out the Modal example.