Limitations of AWS Lambda for AI Workloads

AWS Lambda is the serverless platform that brought Functions-as-a-Service (FaaS) into the mainstream when it launched in 2014. While not quite the first (that honor goes to PiCloud in 2010), it has become the go-to platform for any developer looking to deploy event-driven functions, simple API endpoints, or lightweight microservices without managing infrastructure.

But try to add ML inference or fine-tuning to that list and you’ll start to see the product’s limitations. AWS Lambda was made for a different time, before GPUs became essential infrastructure for every application and before AI workloads moved from research labs to production APIs.

The problems are concrete: no GPU support, pricing that penalizes long-running computations, a cumbersome development experience, and execution timeouts that rule out many compute-intensive workloads. These are the reasons developers are turning away from a platform they have known for over a decade and toward newer serverless providers that are purpose-built for AI.

AWS Lambda GPU Support—or Lack Thereof

You might immediately assume that this is due to organizational inertia. “A juggernaut like Amazon can’t innovate fast enough!”

But the reason runs deeper than that: it is a fundamental limitation of the Lambda architecture.

Lambda uses Firecracker for its virtualization layer. Firecracker was explicitly designed to be minimalist, stripping away everything not essential for running stateless, event-driven workloads. This means no hardware accelerators, no PCIe passthrough capabilities, and, crucially, no GPU support. And there are no plans to change this as adding GPU support would require fundamental changes to Firecracker’s design philosophy.

This leaves Lambda users who need GPU acceleration in an awkward position. Developers must either architect complex workarounds using SageMaker endpoints or Batch jobs, accept the operational overhead of managing EC2 instances, or look beyond AWS entirely.

GPU Support With Modern Serverless Infrastructure

Modern platforms like Modal solve this by offering native GPU support across the entire NVIDIA lineup, from T4s for inference to B200s for training. You simply specify the GPU type in a function decorator and Modal handles the rest. No EC2 instances to manage, no complex orchestration. The same serverless experience you expect, now with the compute power AI workloads require.

The High Costs of AWS Lambda

AWS Lambda’s pricing model becomes prohibitive for sustained or compute-intensive workloads.

For every function execution, Lambda charges you:

- $0.0000166667 per GB-second of compute time

- $0.20 per million requests

CPU power is allocated proportionally to memory. A request of 1,769 MB is allocated the equivalent of 1 vCPU.

The first 400,000 GB-seconds and 1 million requests per month are free. Initially, this pay-per-use model looks attractive.

But production workloads blow past these limits quickly. Consider a typical data processing workload: 2 million JSON files per month, where each job needs 10GB of memory and runs for 1 second. On Lambda, this costs $326.87 monthly.
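The arithmetic behind that figure can be reproduced in a few lines. This is a sketch using only the prices and free-tier allowances quoted above; the function and constant names are illustrative.

```python
# Prices and free-tier allowances as quoted in this article.
LAMBDA_PRICE_PER_GB_SECOND = 0.0000166667
LAMBDA_PRICE_PER_MILLION_REQUESTS = 0.20
FREE_GB_SECONDS = 400_000
FREE_REQUESTS = 1_000_000


def lambda_monthly_cost(invocations: int, memory_gb: float, duration_s: float) -> float:
    """Estimate a monthly Lambda bill after the free tier is applied."""
    gb_seconds = invocations * memory_gb * duration_s
    billable_gb_seconds = max(0, gb_seconds - FREE_GB_SECONDS)
    billable_requests = max(0, invocations - FREE_REQUESTS)

    compute_cost = billable_gb_seconds * LAMBDA_PRICE_PER_GB_SECOND
    request_cost = billable_requests / 1_000_000 * LAMBDA_PRICE_PER_MILLION_REQUESTS
    return compute_cost + request_cost


# 2 million jobs per month, 10 GB of memory each, 1 second per job.
lambda_cost = lambda_monthly_cost(2_000_000, 10, 1)
print(f"${lambda_cost:.2f}")  # $326.87
```

Almost all of the bill is compute: 19.6 million billable GB-seconds account for about $326.67, while the second million requests add only $0.20.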

The billing model actively discourages good architectural patterns. Batching work into longer-running functions should reduce overhead, but can increase costs due to duration-based billing: Lambda meters every invocation in 1 ms increments at the configured memory size. Running a function for 5 minutes costs the same whether it processes one item or thousands, and you still pay the request charge for each trigger.

This creates situations where the most cost-effective approach conflicts with clean architecture. Developers find themselves splitting functions unnaturally or avoiding optimizations that would make sense anywhere else.

Costs on Modern Serverless Infrastructure

If you run this on Modal, the same workload costs $87.76 after free credits. Modal’s unit pricing is significantly cheaper than Lambda’s:

- $0.00000222 per GB-second of compute time

- $0.0000131 per CPU-second of compute time, billed per physical core (one physical core is equivalent to 2 vCPUs)

- no additional cost per request

The example earlier, which consumes 10GB memory and 2.8 physical cores (using the Lambda vCPU:memory allocation ratio above), costs only $117.76 on Modal before credits, with no per-request charges.
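The same calculation for Modal is sketched below, using the unit prices listed above. The $30 credit is an assumption inferred from the gap between the article's two figures ($117.76 and $87.76), and the function name is illustrative.

```python
# Unit prices as quoted in this article.
MODAL_PRICE_PER_GB_SECOND = 0.00000222
MODAL_PRICE_PER_CORE_SECOND = 0.0000131

# Assumption: inferred from the difference between the article's
# pre-credit ($117.76) and post-credit ($87.76) figures.
ASSUMED_MONTHLY_CREDIT = 30.0


def modal_monthly_cost(
    invocations: int,
    memory_gb: float,
    physical_cores: float,
    duration_s: float,
    credit: float = 0.0,
) -> float:
    """Estimate a monthly Modal bill; there is no per-request charge."""
    total_seconds = invocations * duration_s
    memory_cost = total_seconds * memory_gb * MODAL_PRICE_PER_GB_SECOND
    cpu_cost = total_seconds * physical_cores * MODAL_PRICE_PER_CORE_SECOND
    return max(0.0, memory_cost + cpu_cost - credit)


# 2 million jobs, 10 GB and 2.8 physical cores each, 1 second per job.
before_credit = modal_monthly_cost(2_000_000, 10, 2.8, 1)
after_credit = modal_monthly_cost(2_000_000, 10, 2.8, 1, credit=ASSUMED_MONTHLY_CREDIT)
print(f"${before_credit:.2f} before credits, ${after_credit:.2f} after")
# $117.76 before credits, $87.76 after
```

Memory contributes $44.40 and CPU $73.36, so CPU is the larger share of the Modal bill for this workload.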

Why Developers Struggle with AWS Lambda’s Development Experience

Lambda’s development workflow creates friction at every step.

AWS Lambda Cold Start Delays Impact User Experience

Cold starts add unpredictable latency, from hundreds of milliseconds to several seconds. Python functions with ML dependencies? Ten-second delays are common. The “solutions” aren’t ideal: provisioned concurrency defeats the purpose of serverless, and warming functions with scheduled pings wastes money without guaranteeing performance.

AWS Lambda Package Size Limits Restrict Library Usage

Lambda’s 250MB deployment limit means popular libraries don’t fit. PyTorch alone exceeds the limit (not that you can use CUDA without GPU support).

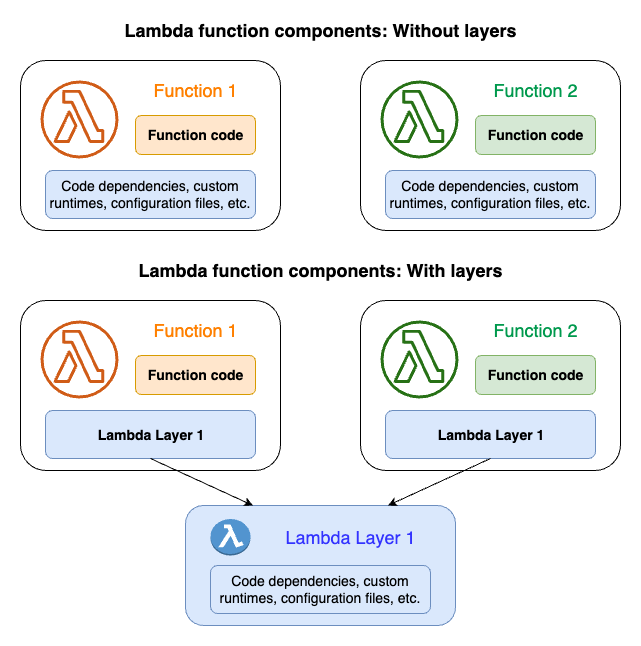

Container images allow 10GB, but this can make cold starts even worse. The layer system caps you at five layers per function, and the unzipped function plus all of its layers must still fit within 250MB. Update one shared layer? Redeploy every function that uses it.

Debugging AWS Lambda Functions Requires Multiple Tools and Deployments

No SSH. No shell access. Very limited live debugging. Just CloudWatch logs. Local testing tools like SAM CLI approximate Lambda’s environment but miss edge cases. Most developers end up deploying to production just to debug basic issues.

AWS Lambda’s Stateless Design Increases Development Complexity

Every function starts fresh. Models must reload from S3, potentially adding minutes to cold starts. The /tmp directory can be configured up to 10GB, but its contents are not guaranteed to survive between invocations. Want to share data between functions? That’s another service, more latency, more complexity. Simple workflows become distributed systems problems.

The Better DX of Modern Serverless Infrastructure

Modal is designed to eliminate these friction points with a developer-first approach. Write normal Python code, add a simple @app.function() decorator, and test it locally with modal run. When you’re ready to deploy the function, simply use modal deploy in your CLI.

Here’s what makes Modal DX significantly better:

- No cloud consoles, config files, separate deployment packages, or layer management. Just pip install your dependencies in the image definition and attach it to your function, all in your application code.

- No image size limits.

- Under the hood, Modal’s custom Rust-based filesystem lazy loads images to drastically reduce cold starts. Even containers with large ML packages boot in seconds.

- Native distributed file system (Modal Volumes) that can be easily attached to functions across your environment.

- Native debugging and observability features. Debug with interactive shells using modal shell. The same code runs locally and in production, with logs streaming to your terminal in real-time. Modal’s native dashboards make it much easier to monitor the health of deployed functions, too.

AWS Lambda Max Timeout

Lambda’s hard 900-second execution limit makes entire categories of workloads impossible.

Video transcoding, model training, large-scale data processing. These tasks need hours, not minutes. A 4K video transcode easily takes 30 minutes. Processing a few gigabytes of complex data hits the wall. Any function that runs longer than 15 minutes is terminated, no exceptions.

If you need to process a million records, you can’t iterate through them in one function. Instead, you must fan out to thousands of parallel invocations or chain functions via Step Functions, adding coordination overhead, error handling complexity, and significant cost from extra invocations. AWS Lambda was designed for “milliseconds to a few minutes” of execution, forcing you to reshape your problem to fit the constraint.
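To see how quickly the fan-out grows, the sketch below splits a job into chunks that each fit inside one invocation. The per-record processing time and the safety margin are hypothetical values chosen for illustration; only the 900-second limit comes from Lambda itself.

```python
import math

LAMBDA_TIMEOUT_S = 900  # Lambda's hard execution limit


def plan_fan_out(
    total_records: int,
    seconds_per_record: float,
    safety_margin_s: int = 180,
) -> list[tuple[int, int]]:
    """Split records into [start, end) ranges that each fit in one invocation.

    safety_margin_s leaves headroom for cold starts and I/O (hypothetical value).
    """
    budget_s = LAMBDA_TIMEOUT_S - safety_margin_s
    records_per_invocation = max(1, math.floor(budget_s / seconds_per_record))
    return [
        (start, min(start + records_per_invocation, total_records))
        for start in range(0, total_records, records_per_invocation)
    ]


# One million records at a hypothetical 0.5 seconds each.
chunks = plan_fan_out(1_000_000, seconds_per_record=0.5)
print(len(chunks))   # 695
print(chunks[0])     # (0, 1440)
print(chunks[-1])    # (999360, 1000000)
```

Each of those 695 ranges becomes its own invocation to schedule, retry on failure, and reconcile afterwards, which is the coordination overhead described above.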

Longer Timeouts With Modern Serverless Infrastructure

Modal functions can run for up to 24 hours, with configurable timeouts from 1 second to 86,400 seconds. This removes the need for complex orchestration patterns.

Your video transcoding, model training, or batch processing job can run as a single function. If you need even longer execution, Modal supports checkpointing for resumable jobs. The platform was built for AI workloads, not just simple webhook handlers.

Modern Serverless Platforms Solve AWS Lambda’s Fundamental Limitations

AWS Lambda revolutionized serverless computing, but it’s stuck in 2014. No GPU support means no AI workloads. The pricing model punishes longer, compute-intensive tasks. The developer experience remains painful with cold starts, package limits, and debugging headaches. The 15-minute timeout makes real computing jobs impossible.

These aren’t edge cases anymore. Modern applications involve GPU inference, large dataset processing, and complex workflows. Lambda forces you to architect around its limitations rather than solving your actual problems.

Platforms like Modal demonstrate what serverless should be: GPU-native, built for long-running tasks, with a developer experience that just works. Developers don’t want to cram workloads into Lambda’s constraints anymore. They want platforms that adapt to the requirements of new technologies.

Try it out in our Playground or sign up to deploy your first function.